Ever have one of those days? You check your traffic, and the graph is heading south. There’s no obvious reason why. Your content feels strong, your backlinks look solid, but the numbers don’t lie. Something is wrong. I’ve been there, staring at that downward trend, and the feeling is just awful. Surprisingly often, the villain is a silent SEO killer, an invisible problem that confuses search engines and waters down your authority. It’s called duplicate content. And if you’re not sure how to find and fix duplicate content issues, you’re leaving rankings on the table.

Forget the dense, jargon-filled guides. This is a playbook straight from the trenches. I’m going to show you exactly what this problem is, why it’s quietly sabotaging all your hard work, and give you a clear, step-by-step process to hunt it down and get rid of it for good. We’ll go over the tools, the tactics, and the mindset you need to get your website clean, authoritative, and climbing the search results where it belongs.

Key Takeaways

Before we get into the weeds, here are the core things to remember:

- Don’t Panic, It’s Not a Penalty: Google isn’t actively punishing you for duplicate content. The real issue is confusion. When Google gets confused, it splits your ranking power, which feels like a penalty.

- It’s Probably an Accident: Most of the time, this isn’t about stolen content. It’s the result of technical quirks—things like URL variations (HTTP vs. HTTPS), session IDs, or product filters on an e-commerce site.

- You’ll Need the Right Tools: A quick Google search can uncover the low-hanging fruit, but for a real audit, you’ll need to fire up tools like Google Search Console, Screaming Frog, or Ahrefs.

- The Fix is All About Clarity: Your goal is to point Google to the one “master” version of your page. You’ll do this mainly with 301 redirects and a nifty bit of code called the rel="canonical" tag.

- An Ounce of Prevention…: The best long-term strategy is to stop the problem before it starts with regular audits, smart internal linking, and a clean technical setup.

So, What Exactly Is Google Calling “Duplicate Content”?

Let’s get this straight right out of the gate. When an SEO like me starts talking about “duplicate content,” I’m not necessarily talking about some thief who copied and pasted your latest blog post. That’s a problem, for sure, but it’s not the one we’re focused on here. We’re talking about significant blocks of text—either within your own website or across different domains—that are identical or, in Google’s words, “appreciably similar.”

Put yourself in Google’s shoes for a second. Its entire mission is to deliver the single best result for a search. If it crawls your website and finds the same thousand-word article on five different URLs, it has to make a choice.

Which one is the real one?

That hesitation is where all the problems begin. It’s not just about perfectly copied text. This also covers:

- Lazy product descriptions: That classic e-commerce shortcut of using the same manufacturer’s description for dozens of similar products.

- Print-friendly pages: Creating a separate, stripped-down URL of a webpage that contains all the same text.

- Forgotten staging sites: Leaving a test version of your website online where search engines can find and index it.

- Messy URL variations: The same exact page being reachable from several different web addresses.

It’s any situation that makes Google tilt its head and think, “Wait a minute… I’ve seen this before.”

Why Should I Even Worry About a Few Similar Pages?

This is the million-dollar question. If it’s not a direct penalty that gets your site blacklisted, why go through all the trouble? Because the damage is real, even if it doesn’t come with a scary warning in your Google Search Console. It’s a slow, quiet bleeding of your site’s authority.

Is Google Going to Penalize My Site?

Let’s put this fear to bed. The “duplicate content penalty” is mostly a myth. Google’s own experts, like John Mueller, have said time and again that they understand a certain amount of this is normal. They aren’t on a witch hunt.

The only time you’d face a true, manual penalty is if you’re trying to cheat the system. We’re talking about intentionally scraping content from other sites or building a network of spammy domains all with the same text, purely to manipulate the rankings. For the vast majority of business owners, that’s not what’s happening. Your duplicates are almost certainly an honest mistake.

So, take a deep breath. You’re not in trouble. But that doesn’t mean you can ignore it.

How Does It Actually Hurt My SEO?

The harm comes from confusion, plain and simple. When a search engine finds multiple versions of your content, it creates a cascade of problems that hit your performance hard.

For starters, Google has to decide which page to index. Faced with three identical URLs, it will usually group them and pick one to show in search results based on its own signals, and that may not be the one you care about. The wrong version ends up ranking, or none of them ranks as well as a single, consolidated page would.

Then, your hard-earned link equity gets diluted. Backlinks are SEO gold. They’re votes of confidence. Now, imagine Page A and Page B are identical. A few great websites link to Page A. A few other great sites link to Page B. Instead of one powerhouse page with all that authority combined, you have two weaker pages. You’ve split your strength right down the middle.

Finally, you’re wasting your crawl budget. Every site gets a certain amount of Google’s attention—its “crawl budget.” If Google’s bots are spending that precious time crawling four different versions of the same product page, they have less time to discover and index the brand-new, unique blog post you just published. On a large site, this can be a major bottleneck.

Where Does This Duplicate Content Even Come From?

Figuring out the why is half the battle. You might be shocked to learn that in most cases, you’re the one creating the duplicates without even knowing it. The causes are usually technical and easy to overlook.

Are My URL Variations Creating a Mess?

This is culprit number one, and it’s not even close. A single piece of content can become accessible through a dozen different URLs. To a search engine, a new URL means a new page. Period.

I learned this lesson in the most painful way possible on my first real blog. I had finally moved my site over to HTTPS for security but completely forgot to properly redirect the old HTTP version. My traffic flatlined. I couldn’t figure it out. Then, after days of digging, the horrifying realization hit me: Google had indexed my entire site twice. Once at http://myfirstblog.com and again at https://myfirstblog.com.

Half my authority was pointing to one, half to the other. I had sawed my own website in half. It was a gut-wrenching, rookie mistake. A simple redirect fixed it, but the lesson was burned into my brain forever.

Watch out for these common URL-based duplicates:

- HTTP vs. HTTPS: Just like my story, Google sees these as two different websites.

- WWW vs. non-WWW: www.yourdomain.com and yourdomain.com are not the same in Google’s eyes.

- Trailing Slashes: To some servers, yourdomain.com/page/ and yourdomain.com/page are unique.

- Capital Letters: yourdomain.com/Page can sometimes be treated as a different page from yourdomain.com/page.

You need to pick one single, official format for your URLs and stick with it.
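To make “one official format” concrete, here is a minimal Python sketch that maps the URL variants above onto a single form. The preferences it bakes in (https, a www. host, lowercase paths, a trailing slash on every path) are assumptions for illustration; pick whichever format fits your site, and only lowercase paths if your server really serves the same page for /Page and /page. On a live site you’d enforce the format with 301 redirects; a helper like this is mostly useful for auditing a crawl export.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed house style for this sketch; change these to match your own choice.
PREFERRED_SCHEME = "https"
WWW_PREFIX = "www."

def normalize_url(url: str) -> str:
    """Map any variant of a URL onto one official format."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # lowercased by urlsplit; any port is dropped
    if not host.startswith(WWW_PREFIX):
        host = WWW_PREFIX + host
    path = parts.path.lower() or "/"  # only safe if /Page and /page serve the same content
    if not path.endswith("/"):
        path += "/"  # assumed policy: every path ends with a slash
    # Query strings are kept as-is; parameter handling is a separate decision.
    return urlunsplit((PREFERRED_SCHEME, host, path, parts.query, ""))

variants = [
    "http://yourdomain.com/page",
    "https://yourdomain.com/page/",
    "http://www.yourdomain.com/Page",
    "https://WWW.yourdomain.com/page",
]
print({normalize_url(v) for v in variants})  # {'https://www.yourdomain.com/page/'}
```

Four addresses, one page: every variant collapses to the same URL, which is exactly what your redirects should do.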

Could My E-commerce Site Be Its Own Worst Enemy?

Oh, absolutely. E-commerce sites are a minefield for duplicate content. The very features that customers love—sorting by price, filtering by color—can unleash technical SEO chaos. Each one of those options often creates a new URL with a parameter attached.

For instance, one category page can suddenly have URLs like:

- www.examplestore.com/shoes

- www.examplestore.com/shoes?sort=price_high

- www.examplestore.com/shoes?color=blue

They all show basically the same content, but to Google, they’re all unique pages.
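A quick way to size this problem on your own store is to group crawled URLs by everything except the query string. The minimal Python sketch below assumes you already have a list of URLs from a crawler export (the list here is made-up sample data); any base page reachable through more than one URL is a candidate for a canonical tag.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit

def group_by_base(urls):
    """Group URLs that differ only in their query string (?sort=..., ?color=...)."""
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        base = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
        groups[base].append(url)
    # A base reachable through more than one URL is a duplicate-content candidate.
    return {base: members for base, members in groups.items() if len(members) > 1}

crawled = [
    "https://www.examplestore.com/shoes",
    "https://www.examplestore.com/shoes?sort=price_high",
    "https://www.examplestore.com/shoes?color=blue",
    "https://www.examplestore.com/hats",
]
for base, members in group_by_base(crawled).items():
    print(f"{base}: {len(members)} URLs")  # https://www.examplestore.com/shoes: 3 URLs
```

On a real store with a dozen filters, the counts per base page are what turn into “thousands of indexed URLs.”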

A few years back, I worked with a client who ran a furniture store online. Their traffic had fallen off a cliff, and they were panicking. My audit uncovered two huge problems. First, they were using the generic manufacturer descriptions for almost every product, meaning their pages were identical to their competitors’. Second, their filtering system was creating thousands of indexed URLs with parameters. It was an absolute disaster.

The recovery was a grind. We had to rewrite hundreds of product descriptions by hand to make them unique. At the same time, we rolled out a massive canonical tag strategy to tell Google that all those filtered URLs were just variations of the main category pages. It took nearly six months, but seeing their traffic finally start to climb again was one of the most satisfying moments of my career. It was proof of how deep this problem can run.

What About Content Syndication and Scrapers?

Sometimes the duplicate content is on someone else’s site. This can be intentional, like when you syndicate an article to a larger publication. It’s a great way to get more eyeballs on your work, but if you don’t set it up right, the syndicated copy can actually outrank your original.

And then there are the scrapers—bottom-feeding websites that just steal content to fill their pages. While Google is pretty smart about figuring out who the original author is, sometimes the scrapers get their copy indexed first, creating a temporary but infuriating problem.

Alright, I’m Convinced. How Do I Find These Duplicate Content Issues?

Time for the hunt. This is where you get to play detective on your own website. Using a mix of tools and techniques is the best way to get a full picture of what’s going on.

Can I Just Use a Few Simple Google Searches?

You bet. This is the perfect place to start. Google’s own search operators are your friend here.

The quickest check is to grab a unique sentence from one of your pages—something specific—and pop it into Google’s search bar inside quotation marks. Like this:

"This is a very specific sentence from my product page"

If more than one result from your own domain pops up, you’ve found an internal duplicate. If other websites show up, you might have a scraper or a syndication issue.

You can also use the site: operator to keep the search locked to your own website:

site:yourdomain.com "a unique sentence from a product description"

This is a great, free way to spot-check, but it won’t work for a full audit on a larger site. For that, you need to call in the big guns.
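If you have a batch of sentences to spot-check, a few lines of Python can build both query styles and the matching search URLs for you to open in a browser. This is only a convenience sketch: the helper names are hypothetical, and it relies on the standard library’s urlencode to escape the quotation marks and spaces.

```python
from urllib.parse import urlencode

def exact_match_query(sentence, domain=None):
    """Wrap a sentence in quotes; optionally lock it to one site with site:."""
    query = f'"{sentence}"'
    return f"site:{domain} {query}" if domain else query

def search_url(query):
    """Return a Google search URL for the query, safely URL-encoded."""
    return "https://www.google.com/search?" + urlencode({"q": query})

sentences = ["This is a very specific sentence from my product page"]
for s in sentences:
    print(search_url(exact_match_query(s)))                    # every site with this text
    print(search_url(exact_match_query(s, "yourdomain.com")))  # only your own site
```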

What Are the Best Tools for a Deeper Dive?

To do this right, you’ll need some specialized software. These tools see your site the way Google does.

- Google Search Console: Your first and most important stop. This is free data straight from the source. In the “Pages” report, look for any URLs flagged as “Duplicate without user-selected canonical” or “Duplicate, Google chose different canonical than user.” That’s Google literally telling you it’s confused.

- Screaming Frog SEO Spider: This is the tool for serious technical SEOs. It’s a program that crawls your entire website from top to bottom. Once it’s done, you can easily sort your pages and find any with identical page titles, meta descriptions, or H1 tags—all huge red flags for duplicate content.

- Ahrefs or Semrush: These big SEO platforms have fantastic site audit features that do a lot of the work for you. They’ll crawl your site and spit out a report that clearly identifies any duplicate or near-duplicate content issues.

- Copyscape: This one is your go-to for finding external duplication. You paste in a URL from your site, and it scours the internet to see if that text exists anywhere else. It’s the fastest way to find out if you’re being scraped.
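The tools above don’t publish exactly how they score near-duplicates, so treat this as an illustration of the idea rather than a copy of any of them. It’s a minimal Python sketch of one common textbook approach: break each page’s text into overlapping five-word “shingles” and measure what share of them two pages have in common (Jaccard similarity). The page texts and the 0.8 threshold are assumptions; comparing every pair is fine for a few thousand pages but gets slow beyond that.

```python
import re
from itertools import combinations

def shingles(text, k=5):
    """Return the set of overlapping k-word sequences ("shingles") in a text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) <= k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def similarity(text_a, text_b):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a), shingles(text_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def near_duplicates(pages, threshold=0.8):
    """Return (url_a, url_b, score) for every pair of pages at or above threshold."""
    results = []
    for (url_a, text_a), (url_b, text_b) in combinations(sorted(pages.items()), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:  # 0.8 is an arbitrary cut-off for this sketch
            results.append((url_a, url_b, round(score, 2)))
    return results

pages = {
    "/oak-table": "Solid oak dining table with a natural oil finish that seats six people.",
    "/oak-table-large": "Solid oak dining table with a natural oil finish that seats six people comfortably.",
    "/camping-chair": "Lightweight folding camping chair in bright orange with a cup holder.",
}
print(near_duplicates(pages))  # [('/oak-table', '/oak-table-large', 0.9)]
```

The two table pages share 9 of their 10 distinct shingles, which is the signature of a copied manufacturer description with a word tacked on.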

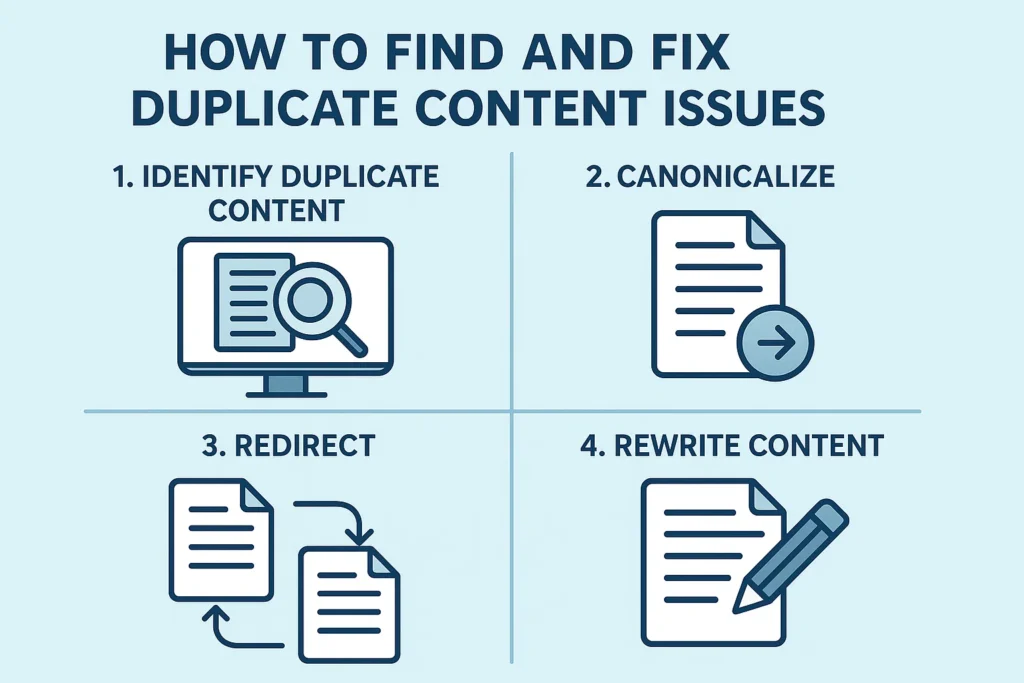

I’ve Found the Duplicates. Now How Do I Actually Fix Them?

Once you have your list of problem URLs, you can start fixing them. The mission is simple: for every piece of content, you need to tell Google, in no uncertain terms, which URL is the real one.

Which Page Should I Keep? Choosing Your “Canonical” Version

First, you have to make a choice. For each group of duplicate URLs, you need to pick one to be the “canonical” version—the master copy, the one you want to rank.

So, how do you pick?

- Which URL already gets the most traffic?

- Which one has the best backlinks pointing to it?

- Which one is linked in your main navigation or XML sitemap?

- Which URL just looks cleaner and more user-friendly?

Usually, the choice is obvious. You want to consolidate all your power onto the strongest existing page. That’s your canonical. Everything else is a duplicate that needs to be dealt with.
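If you’re juggling dozens of duplicate groups, you can turn the questions above into a simple tie-breaking rule. This Python sketch assumes you’ve already pulled each candidate’s traffic, referring domains, and navigation/sitemap status from your own tools (the numbers below are invented), and it ranks them in the same order as the list: traffic, then backlinks, then navigation or sitemap presence, then the shorter URL. That ordering is a judgment call, not a rule from Google, so adjust it to your situation.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    url: str
    monthly_visits: int      # from your analytics (assumed export)
    referring_domains: int   # from a backlink tool (assumed export)
    in_nav_or_sitemap: bool  # linked from main navigation or listed in the XML sitemap

def pick_canonical(candidates):
    """Pick the strongest URL: traffic, then backlinks, then nav/sitemap, then shortest."""
    best = max(
        candidates,
        key=lambda c: (c.monthly_visits, c.referring_domains, c.in_nav_or_sitemap, -len(c.url)),
    )
    return best.url

group = [
    Candidate("https://www.yourdomain.com/page/", 900, 12, True),
    Candidate("http://yourdomain.com/page", 40, 3, False),
    Candidate("https://www.yourdomain.com/page/?ref=footer", 900, 0, False),
]
print(pick_canonical(group))  # https://www.yourdomain.com/page/
```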

What’s the Best Way to Tell Google Which Page to Rank?

You’ve got three main tools in your arsenal. The one you choose depends on the situation.

1. The 301 Redirect

A 301 redirect is a permanent forward from one URL to another. It’s the best choice when a duplicate page has no reason to exist on its own. The HTTP to HTTPS example is a perfect case. You never want a user or a search engine to land on the old, insecure version, so you redirect them. A 301 redirect also passes most of the link equity from the old URL to the new one, making it the cleanest way to consolidate your SEO power.
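Most sites set this up in the web server, CDN, or CMS configuration rather than in application code. Still, to show what a 301 actually does, here is a minimal Python WSGI middleware sketch that permanently redirects any request not already on https://www.yourdomain.com (a hypothetical canonical origin) to the same path there. It assumes TLS terminates at this server; behind a proxy or load balancer, wsgi.url_scheme may report http even for secure requests, and you’d need to read the proxy’s forwarded-protocol header instead.

```python
# Hypothetical canonical host; replace with your own.
CANONICAL_HOST = "www.yourdomain.com"

def force_canonical_origin(app):
    """WSGI middleware: 301-redirect any request not on https://CANONICAL_HOST."""
    def middleware(environ, start_response):
        scheme = environ.get("wsgi.url_scheme", "http")
        # Drop any ":port" suffix and compare hosts case-insensitively.
        host = environ.get("HTTP_HOST", "").split(":")[0].lower()
        if scheme == "https" and host == CANONICAL_HOST:
            return app(environ, start_response)  # already canonical, serve normally
        # Rebuild the same path and query string on the canonical origin.
        path = (environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")) or "/"
        query = environ.get("QUERY_STRING", "")
        location = f"https://{CANONICAL_HOST}{path}" + (f"?{query}" if query else "")
        start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
        return [b""]
    return middleware

def hello_app(environ, start_response):
    """Stand-in for your real application."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"canonical page"]

application = force_canonical_origin(hello_app)
```

To try it locally you could serve application with wsgiref.simple_server.make_server; since that serves plain HTTP, every request should come back as a 301 with a Location header on the https www host.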

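2. The rel="canonical" Tag

This is the Swiss Army knife for fixing duplicate content. It’s a tiny piece of code you add to the <head> section of a webpage that says, “Hey Google, this page is just a copy. The real, master version is over at this other URL. Please send all ranking power there.” Google treats it as a strong hint rather than a binding command, so back it up by pointing your redirects, internal links, and sitemap at the same URL. The tag itself looks like this: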

<link rel="canonical" href="https://www.yourdomain.com/the-real-page" />

This is the ideal fix for e-commerce filters and tracking parameters. The user can still land on the page with all the filters applied, but the canonical tag ensures that only the main, clean category page gets any love from Google.
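When you audit canonical tags, it helps to pull them out of each page’s HTML programmatically. This minimal Python sketch uses only the standard library’s html.parser and returns every canonical link it finds, because a page carrying two different canonical URLs sends exactly the kind of mixed signal you’re trying to eliminate. It doesn’t verify that the tag sits inside <head>, and fetching the pages is left out; the helper names are hypothetical.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; values may be None.
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href.strip())

def find_canonicals(html):
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals

page = """<html><head>
<link rel="canonical" href="https://www.yourdomain.com/the-real-page" />
</head><body>Filtered view</body></html>"""

found = find_canonicals(page)
if len(found) != 1:
    print("Missing or conflicting canonical tags:", found)
else:
    print("Canonical:", found[0])  # Canonical: https://www.yourdomain.com/the-real-page
```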

3. The Meta Robots Noindex Tag

Sometimes you have pages you need to keep but that have zero value for search engines, like a “thank you for your purchase” page or an internal search results page. For these, you can use a noindex tag.

<meta name="robots" content="noindex">

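This tag tells search engines they can crawl the page, but they should not, under any circumstances, add it to their search index. One catch: don’t also block the page in robots.txt, because a crawler that can’t fetch the page never sees the noindex tag. It’s a simple way to keep your search results clean.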
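To confirm which pages actually carry the tag, a short standard-library parser is enough. This Python sketch (helper names are hypothetical) reads the robots meta tag and its Googlebot-specific variant, and treats “none” as noindex, since Google documents “none” as shorthand for “noindex, nofollow.” It only inspects HTML; a noindex can also be sent in an X-Robots-Tag HTTP header, which this sketch doesn’t check.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = (attributes.get("content") or "").lower()
            self.directives.update(d.strip() for d in content.split(",") if d.strip())

def is_noindexed(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow".
    return bool({"noindex", "none"} & parser.directives)

print(is_noindexed('<head><meta name="robots" content="noindex"></head>'))        # True
print(is_noindexed('<head><meta name="robots" content="index, follow"></head>'))  # False
```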

How Can I Manage URL Parameters Better?

This is where the canonical tag truly shines. Even though the old URL Parameters tool in Search Console is gone, you still need to control the mess that filtering and tracking can create. The modern best practice is to make sure every parameter URL that shows essentially the same content as the main page (sort orders, filters, tracking codes) carries a canonical tag pointing back to the clean, parameter-free version. This asks Google to fold all the messy variations into the one URL that matters.
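Here is a minimal Python sketch of that rule, for sites whose templates can compute the canonical URL on each request. It drops every query parameter except a hypothetical allowlist (KEEP_PARAMS) of parameters that genuinely change the content, such as a page number, since each page of a paginated series normally keeps its own canonical. Sort options, color filters, and tracking codes are stripped. Which parameters belong on the allowlist depends entirely on your site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allowlist: parameters that really change what the page shows.
KEEP_PARAMS = {"page"}

def canonical_url(request_url):
    """Strip every query parameter that isn't on the allowlist."""
    parts = urlsplit(request_url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k in KEEP_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(request_url):
    """Render the <link rel="canonical"> tag, HTML-escaping the URL."""
    return f'<link rel="canonical" href="{escape(canonical_url(request_url))}" />'

print(canonical_tag("https://www.examplestore.com/shoes?sort=price_high&color=blue"))
# <link rel="canonical" href="https://www.examplestore.com/shoes" />
```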

What About Preventing Duplicate Content in the Future?

Fixing the mess is one thing. Making sure it never happens again is another. A proactive strategy is the best way to keep your site healthy in the long run.

Can I Build a Content Strategy That Avoids Duplication?

You sure can. It starts with a simple commitment: create unique content. If you run an e-commerce site, that means writing your own product descriptions. Don’t just copy the manufacturer’s text. Tell a story. Sell the product.

If you run a blog, always bring your own angle. Don’t just rehash what the top ten results already say. Add your own data, your own experiences, your own voice. Before creating any new page, ask one simple question: “Do I already have a page that does this?” If the answer is yes, update that existing page instead of creating a new one. This also prevents keyword cannibalization, a close cousin of duplicate content in which several of your own pages compete for the same search.

What Are Some Technical Best Practices to Follow?

Technically, it all comes down to consistency. Make sure all your internal links point to the one, canonical version of a URL. Set up your site-wide redirects correctly from day one. And most importantly, make SEO audits a regular part of your routine. Check for duplicate content issues every few months. Catching them early is infinitely easier than fixing a massive, site-wide problem a year from now.
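Checking that internal links point at canonical URLs is easy to automate once you have a crawl. This Python sketch assumes two inputs you’d build yourself: a list of (source page, link target) pairs from a crawler export, and a mapping from each known duplicate URL to its canonical. Both are hypothetical sample data here. Anything it returns is a link worth updating so it stops sending authority to a duplicate.

```python
def links_to_fix(internal_links, canonical_of):
    """Return (source, old_target, canonical) for links that hit a known duplicate."""
    fixes = []
    for source, target in internal_links:
        canonical = canonical_of.get(target, target)  # unknown URLs count as canonical
        if canonical != target:
            fixes.append((source, target, canonical))
    return fixes

canonical_of = {
    "http://yourdomain.com/page": "https://www.yourdomain.com/page/",
    "https://www.yourdomain.com/page/?ref=footer": "https://www.yourdomain.com/page/",
}
internal_links = [
    ("/blog/post-a/", "http://yourdomain.com/page"),
    ("/blog/post-b/", "https://www.yourdomain.com/page/"),
    ("/about/", "https://www.yourdomain.com/page/?ref=footer"),
]
for source, old, new in links_to_fix(internal_links, canonical_of):
    print(f"{source}: change {old} -> {new}")
```

Running this after every audit turns “make sure all your internal links point to the canonical version” into a short, concrete to-do list.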

Take Control of Your Content

Duplicate content can seem like this big, scary, technical monster. It’s not. It’s a common problem with logical solutions. Once you understand where it comes from and how to use your tools—redirects, canonicals, and noindex tags—you can take back control of your website.

This is about focusing your website’s authority. It’s about making sure every page and every backlink is pulling its weight. You have the playbook. Now go get your site clean and claim the rankings you’ve earned.

FAQ

Does Google penalize websites for duplicate content?

Google does not typically penalize websites for duplicate content unless it is part of manipulative practices. Usually, Google understands that some duplication is natural, but it can cause confusion and reduce your site’s search visibility.

What is duplicate content in SEO and why does it matter?

Duplicate content in SEO refers to significant blocks of text that are identical or very similar across different URLs on your website or across domains. It matters because it confuses search engines, dilutes your site’s authority, and can negatively impact your rankings.

How does duplicate content harm my SEO efforts?

Duplicate content harms SEO by causing confusion for search engines about which page to index, diluting your link equity across multiple pages, and wasting crawl budget, which limits the discovery of new, unique content.

What are common causes of duplicate content on a website?

Common causes include URL variations like HTTP vs. HTTPS, WWW vs. non-WWW, trailing slashes, capital letter differences, duplicate product descriptions, printer-friendly pages, staging sites, and URL parameters from filters or tracking.

How can I identify and fix duplicate content issues effectively?

Identify duplicates using tools like Google Search Console, Screaming Frog, or Ahrefs. Then fix them by choosing a canonical page and pointing search engines to it with 301 redirects or rel="canonical" tags, or by keeping low-value pages out of the index with the meta robots noindex tag.