You’ve poured your heart into it. The content is killer, the website looks sharp, and you’ve hustled to get the word out. So why is your traffic graph so stubbornly flat? Or maybe it’s even starting to dip. It feels like you’re yelling into a hurricane, and Google isn’t even turning its head. What is going on?

Nine times out of ten, the problem isn’t your content. It’s the stuff you can’t see. Your website has a technical foundation, and just like a house, tiny cracks in that foundation can threaten the whole structure. This is where a real audit comes in. It’s your flashlight in a dark room. I know, the phrase “technical SEO audit” sounds horribly boring and complicated. It makes you think of spreadsheets with a million rows and code that makes your eyes glaze over.

We’re not doing that today.

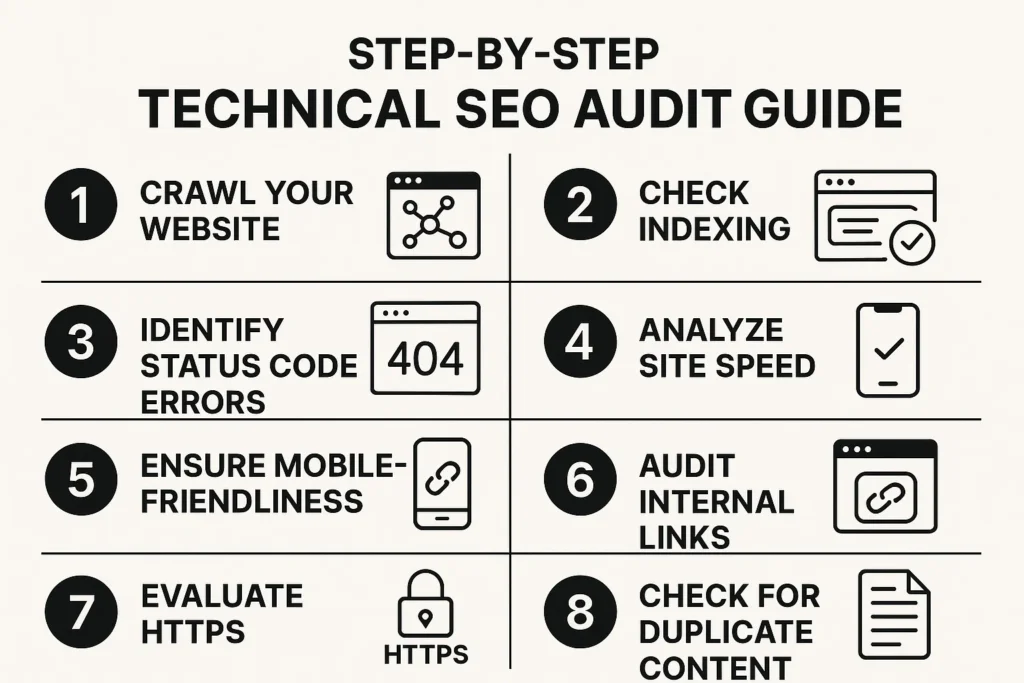

This isn’t going to be another dry, jargon-stuffed manual. This is a practical, step-by-step technical SEO audit guide that will actually make sense. I’m going to pull back the curtain on the exact process I follow, showing you how to spot the errors that are costing you traffic and how to fix them. We’re going to translate the geek-speak into a straightforward plan. You’ve got this.

Key Takeaways

- Foundation First: Technical SEO is the concrete slab your entire site is built on. If it’s shaky, nothing else you build on top will be stable.

- Get Found: The goal is simple: let Google crawl your site easily, understand what it’s about, and show it to the right people.

- Speed Kills (Slowness, That Is): A slow site doesn’t just annoy visitors; it actively costs you money and rankings. Speed is a feature, not a bonus.

- Stop Confusing Google: Duplicate pages and messy signals split your SEO power. The clearer you are, the better you’ll rank.

- Modern Rules: Your site has to be secure (HTTPS) and work perfectly on a phone. In today’s world, this is non-negotiable.

So, Where Do You Even Begin with a Technical SEO Audit?

Starting an audit feels overwhelming. You know what you want to achieve, but the first step is a mystery. The trick is to get organized before you dive in. Think of it as prepping your kitchen before you start cooking. This setup phase is what separates a focused, useful audit from a chaotic mess. It’s boring, but don’t skip it.

What Tools Should You Have in Your Arsenal?

Forget about the expensive, complex software suites for now. You can get 90% of what you need for a world-class audit with just three key tools.

Here’s your starter kit:

- Google Search Console (GSC): This is your direct line to Google, and it’s completely free. It’s how Google tells you what it thinks of your site—the errors it sees, the keywords you’re showing up for, and what’s getting indexed. If you don’t have this set up, you’re flying blind. Stop and get it done.

- A Website Crawler: Think of this as your personal detective. A crawler like Screaming Frog SEO Spider (I love it, and the free version, which crawls up to 500 URLs, is amazing for smaller sites) or the audit tools in Ahrefs/Semrush will trace every single link on your site, just like Google does. It reports back with everything it finds, good and bad.

- A Page Speed Tool: Use Google PageSpeed Insights. It’s free, it uses Google’s own data, and it gives you clear, actionable advice on Core Web Vitals. It doesn’t just give you a score; it hands you a specific to-do list to get faster.

With these three in your corner, you’re ready to find almost any technical problem.

How Do You Set a Baseline for Your Audit?

How will you know if you’re making progress if you don’t know where you started? Before you touch a thing, take a snapshot of your site’s health right now. This is your “before” photo.

Open a spreadsheet—your “Audit Master” sheet. In here, you’ll log a few key numbers from Google Analytics and Search Console for the last month or so. Jot down your total organic traffic, top 5 keywords and their ranks, total indexed pages from GSC, and any crawl errors it’s currently showing.

Now, run your first crawl with Screaming Frog. Don’t try to fix anything yet. Just export the reports for 404 errors, redirects, pages with noindex tags, and your title tags. Save them. This is your starting point. Seeing the number of errors in these reports shrink as you work is one of the most satisfying parts of the process.
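If you’d rather not compare those exports by eye, a few lines of Python can diff a “before” and “after” copy of the same report. This is a minimal sketch: it assumes each export is a CSV file with the page URL in a column named `Address`, so pass a different `url_column` if your crawler labels it otherwise. The function names are made up for this example.

```python
import csv
from typing import Iterable


def urls_in_export(lines: Iterable[str], url_column: str = "Address") -> set[str]:
    """Collect the set of URLs listed in one CSV export.

    Assumption: the URL lives in a column called `url_column`.
    """
    reader = csv.DictReader(lines)
    return {row[url_column].strip() for row in reader if row.get(url_column)}


def compare_exports(before: Iterable[str], after: Iterable[str],
                    url_column: str = "Address") -> dict[str, set[str]]:
    """Compare a baseline export with a later export of the same error report."""
    old = urls_in_export(before, url_column)
    new = urls_in_export(after, url_column)
    return {
        "fixed": old - new,         # errors that disappeared since the baseline
        "still_broken": old & new,  # errors present in both crawls
        "new": new - old,           # errors introduced since the baseline
    }
```

Open both files with `newline=""` and pass the file objects straight to `compare_exports`; the sizes of `fixed` and `new` are the numbers worth logging in your Audit Master sheet.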

Is Google Actually Seeing Your Website Correctly?

This is the most important question. If Google can’t find and understand your pages, you’re invisible. It’s that simple. The worst traffic drops I’ve ever had to fix almost always came back to a basic crawling or indexing problem. You have to nail this. It’s making sure the front door is unlocked and the “Open” sign is on.

How Can You Check if Your Key Pages Are Indexed?

Let’s start with a quick and dirty check. Go to Google and search for site:yourwebsite.com. This tells Google to only show you results from your website. The count Google shows is only a rough estimate, but is it way off from the number of pages you actually have? A big difference, either high or low, is a huge red flag.

For the real story, head to Google Search Console. The “Indexing > Pages” report is the truth. It shows what’s indexed and, more importantly, why other pages aren’t. It will tell you if pages are blocked, if Google crawled them but chose not to index them, or if they’re just plain broken (404s). This report is a gold mine.

Are You Accidentally Blocking Google with Robots.txt?

Your robots.txt file is a simple text file that gives search engines rules about what they can and can’t look at on your site. It’s located at yourwebsite.com/robots.txt. It’s a very powerful file, which means it’s also very easy to mess up spectacularly.

One little line—Disallow: /—can tell Google to ignore your entire website. It happens.

Check that file right now. Look for any Disallow rules. Are you blocking something important by mistake? A simple Disallow: /blog/ could be hiding all your articles. If you’re not sure, open the robots.txt report in Google Search Console (it replaced the old Robots.txt Tester in late 2023) to see the file Google actually fetched, and run your important pages through the URL Inspection tool to confirm they’re crawlable.
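You can also sanity-check your rules offline with Python’s standard-library `urllib.robotparser`. This is a minimal sketch: you paste in your robots.txt text and list the URLs you care about (the `example.com` URLs and the `blocked_urls` helper are placeholders for illustration). One caveat: this parser applies the first matching rule in file order and doesn’t understand `*` or `$` wildcards inside paths, while Google uses the most specific matching rule, so treat Search Console as the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `user_agent` may NOT crawl under these robots.txt rules."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly; nothing is fetched over the network.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


robots = """\
User-agent: *
Disallow: /blog/
Disallow: /cart/
"""

important = [
    "https://example.com/",
    "https://example.com/services/technical-seo-audits",
    "https://example.com/blog/my-best-article",
]

for url in blocked_urls(robots, important):
    print("BLOCKED:", url)  # prints: BLOCKED: https://example.com/blog/my-best-article
```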

Have Rogue “Noindex” Tags Sabotaged Your Rankings?

This one still gives me nightmares. A few years back, an e-commerce client came to me in a total panic. Their organic traffic had tanked, falling nearly 80% in two weeks. They had a huge site with thousands of products and no idea what went wrong.

I fired up my crawler and immediately saw it. One. Single. Line. Of. Code.

A developer had accidentally left a noindex tag on the main product page template during a small site update. That tag is a direct order to Google: “Don’t show this in search.” Because it was on the template, it was on every single product page. They were telling Google to erase their business from the search results.

We removed that tag, and traffic started to rebound within a week. It was a stark reminder that a few characters of code can cost a company hundreds of thousands of dollars.

Use your crawler to hunt for noindex and nofollow directives. It will give you a list of every URL containing these tags. Go through that list carefully. If your homepage, your key service pages, or your best-selling products are on it, you’ve found a five-alarm fire. Fix it now.
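If you want to spot-check a single URL outside your crawler, remember that a noindex can live in two places: a `<meta name="robots">` tag in the HTML, or an `X-Robots-Tag` HTTP response header. Here’s a minimal Python sketch using only the standard library; the function names are hypothetical, it only looks at the generic `robots` and `googlebot` meta names, and it errs on the side of flagging anything that looks like noindex.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def _blocks_indexing(value: str) -> bool:
    # "none" is shorthand for "noindex, nofollow". Header values may carry a
    # user-agent prefix such as "googlebot: noindex", so ":" is split on too.
    tokens = {t.strip() for t in value.lower().replace(":", ",").split(",")}
    return "noindex" in tokens or "none" in tokens


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(a.get("content", ""))


def find_noindex(html: str, headers: dict[str, str]) -> list[str]:
    """Return where a noindex directive was found: 'meta', 'header', both, or neither."""
    found = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if any(_blocks_indexing(d) for d in parser.directives):
        found.append("meta")
    # HTTP header names are case-insensitive.
    header_value = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    if _blocks_indexing(header_value):
        found.append("header")
    return found


def check_url(url: str) -> list[str]:
    """Fetch a live page and run find_noindex on it (needs network access)."""
    with urlopen(Request(url, headers={"User-Agent": "audit-script"}), timeout=30) as resp:
        html = resp.read().decode("utf-8", errors="replace")
        # A response can carry several X-Robots-Tag headers, so join them all.
        robots_header = ", ".join(resp.headers.get_all("X-Robots-Tag") or [])
    return find_noindex(html, {"X-Robots-Tag": robots_header})
```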

Does Your XML Sitemap Give Google a Clear Roadmap?

If your website is a city, an XML sitemap is the clean, easy-to-read tourist map you hand to Google. It’s a list of all the important pages you want it to know about. Google is smart, but a sitemap makes its job easier and ensures it doesn’t overlook a key part of your site.

First, locate your sitemap (usually at yourwebsite.com/sitemap.xml). Make sure that URL is submitted in Google Search Console under “Sitemaps.” GSC will tell you if there are any errors with it.

Second, your sitemap needs to be clean. It should only contain high-quality, indexable URLs. That means no pages that are:

- Redirecting (3xx status codes)

- Broken (4xx status codes)

- Blocked by robots.txt

- Tagged with noindex

Crawl the URLs from your sitemap file. Every single one should come back with a “200 OK” status. A sitemap full of junk URLs is like a map full of dead-end streets; it just wastes Google’s time.
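Most crawlers have a “list mode” for exactly this, but the logic is simple enough to sketch in Python. This minimal example assumes a standard `<urlset>` sitemap (a sitemap index file, which lists other sitemaps, would need one more step) and sends HEAD requests with redirects switched off so that 3xx codes stay visible. Some servers answer HEAD differently from GET, so double-check anything odd in a browser. The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.error import HTTPError
from urllib.request import HTTPRedirectHandler, Request, build_opener

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Extract every <loc> from a standard <urlset> sitemap (not a sitemap index)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


class NoRedirect(HTTPRedirectHandler):
    """Stop urllib from silently following redirects so 3xx codes stay visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


_opener = build_opener(NoRedirect)


def status_of(url: str) -> int:
    """HTTP status for a HEAD request, without following redirects (needs network)."""
    try:
        with _opener.open(Request(url, method="HEAD"), timeout=15) as resp:
            return resp.status
    except HTTPError as err:  # with NoRedirect, 3xx responses land here along with 4xx/5xx
        return err.code


def non_200_entries(xml_text: str) -> dict[str, int]:
    """Map each sitemap URL that doesn't answer 200 OK to its status (-1 = no response)."""
    problems = {}
    for url in sitemap_urls(xml_text):
        try:
            code = status_of(url)
        except OSError:  # DNS failures, timeouts and refused connections
            code = -1
        if code != 200:
            problems[url] = code
    return problems
```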

Is Your Site Architecture Confusing Both Users and Search Engines?

Good site architecture is like a well-organized grocery store. You can easily find the bread, the milk, and the eggs because everything is in a logical place. Bad architecture is a chaotic maze that frustrates everyone, including the search engines trying to figure out what’s important.

How Deep Do Users Have to Click to Find Important Content?

“Click depth” refers to the number of clicks it takes to get from the homepage to another page on your site. The homepage has a click depth of 0. Pages linked directly from the homepage have a click depth of 1, and so on.

Why does this matter? As a general rule, the fewer clicks it takes to reach a page, the more important Google perceives that page to be. Your most critical pages (your main services, top-selling products, cornerstone content) should be easily accessible, ideally within three clicks of the homepage.

You can find this information in your site crawler’s report. Screaming Frog, for example, has a “Crawl Depth” column for every URL it finds. Sort this column from largest to smallest. Are any of your high-priority pages buried at a click depth of 4, 5, or even deeper? If so, you need to rethink your internal linking strategy. Consider adding links to these deep pages from more authoritative pages, like your homepage or main category pages, to make them more prominent and accessible.
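Under the hood, click depth is just a breadth-first search over your internal links, starting at the homepage. Your crawler does this for you; this minimal Python sketch only shows the idea. The `site` link map is a made-up example of what you might build from a crawler’s outlinks export.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Fewest clicks from `home` to each reachable page (breadth-first search).

    `links` maps each page to the internal pages it links to. Pages that can't
    be reached from `home` at all (orphan pages) are left out of the result.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: an important guide only reachable through paginated archives.
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/technical-seo-audits"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/old-but-important-guide"],
}

depths = click_depths(site)
buried = sorted(page for page, depth in depths.items() if depth > 3)
print(buried)  # ['/blog/old-but-important-guide']
```

A single link to that guide from the homepage or the `/services/` page would bring it up to depth 1 or 2.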

Are Your URLs Simple and Clean, or Long and Messy?

Your URLs are one of the first things a user and a search engine see. They should be simple, descriptive, and easy to read. A clean URL structure provides context and improves user experience.

Consider these two examples:

- Bad: yourwebsite.com/cat.php?id=3&sid=98a7sd8f9

- Good: yourwebsite.com/services/technical-seo-audits

The good URL is immediately understandable. It tells you exactly what the page is about. The bad URL is a meaningless string of parameters.

When auditing your URLs, look for the following:

- Simplicity: Are they clean and readable?

- Keywords: Do they include relevant keywords that describe the page’s content?

- Format: Are you using hyphens (-) to separate words instead of underscores (_) or spaces? Hyphens are the standard and are treated as word separators by Google.

Your crawler will provide a full list of all URLs on your site. Scan this list for long, ugly, parameter-filled URLs. These are often candidates for rewriting. If you do change your URL structure, be sure to implement 301 redirects from the old URLs to the new ones to preserve link equity and prevent broken links.
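If your crawl export runs to thousands of rows, a short Python sketch can pre-filter it using the checks above. The 100-character limit is an arbitrary rule of thumb chosen for this example, not a Google limit, and `slugify` is a hypothetical helper showing how a clean, hyphenated slug can be built from a page title (it drops non-ASCII characters, so adjust it for non-English titles).

```python
import re
from urllib.parse import urlsplit


def url_issues(url: str, max_length: int = 100) -> list[str]:
    """Flag the URL problems discussed above (max_length is an assumed rule of thumb)."""
    parts = urlsplit(url)
    issues = []
    if parts.query:
        issues.append("has query parameters")
    if "_" in parts.path:
        issues.append("uses underscores instead of hyphens")
    if " " in parts.path or "%20" in parts.path:
        issues.append("contains spaces")
    if len(url) > max_length:
        issues.append(f"longer than {max_length} characters")
    return issues


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


print(url_issues("https://yourwebsite.com/cat.php?id=3&sid=98a7sd8f9"))  # ['has query parameters']
print(slugify("Technical SEO Audits"))  # technical-seo-audits
```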

Do You Have a Breadcrumb Trail for Easy Navigation?

Breadcrumbs are the little navigational links you often see at the top of a page, like Home > Services > Technical SEO. They serve two excellent purposes.

First, they dramatically improve user experience. They show users exactly where they are on your site and provide an easy, one-click path back to previous categories or the homepage. That can help reduce bounce rates and keep people engaged.

Second, they help search engines understand your site’s structure. Breadcrumbs reinforce your hierarchy and spread link equity throughout your site in a logical way. If you have an e-commerce site or any website with multiple layers of categories, breadcrumbs are an absolute must. Check your key pages. Is this simple navigational aid present? If not, implementing it is a quick win for both users and SEO.
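Beyond the visible links, a common way to spell the trail out for Google is schema.org `BreadcrumbList` structured data in JSON-LD, which Google documents for breadcrumb rich results. Treat this as an optional extra on top of the visible breadcrumbs. Here’s a minimal Python sketch that builds the markup for the Home > Services > Technical SEO trail; the URLs are placeholders and `breadcrumb_jsonld` is a hypothetical helper.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, homepage first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)  # positions start at 1
        ],
    }
    return json.dumps(data, indent=2)


trail = [
    ("Home", "https://yourwebsite.com/"),
    ("Services", "https://yourwebsite.com/services/"),
    ("Technical SEO", "https://yourwebsite.com/services/technical-seo-audits"),
]
# The output goes inside a <script type="application/ld+json"> tag on the page.
print(breadcrumb_jsonld(trail))
```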

Could Your Website’s Speed Be Costing You Visitors?

Let’s face it. We live in an impatient world. If a page takes more than a few seconds to load, we’re gone. Page speed is no longer a “nice to have”; it’s a fundamental aspect of user experience and a confirmed Google ranking factor. A slow website will actively drive away potential customers and tell Google that your site provides a poor experience.

What Are Core Web Vitals and Why Should You Care?

Core Web Vitals are a specific set of metrics that Google uses to measure a page’s real-world user experience. They are made up of three main components:

- Largest Contentful Paint (LCP): How long does it take for the largest image or block of text visible on the screen to render? This measures loading performance.

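- Interaction to Next Paint (INP), formerly First Input Delay (FID): How quickly does the page respond when a user interacts with it (e.g., clicks a button)? FID only measured the delay before the first interaction was handled; INP looks at the clicks, taps, and key presses throughout the whole visit and reports one of the slowest. This measures interactivity. (Note: INP officially replaced FID as a Core Web Vital in March 2024.)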

- Cumulative Layout Shift (CLS): Does the page layout jump around as it loads? This measures visual stability.

You don’t need to be a developer to understand this. Just know that Google is measuring these factors, and poor scores can hurt your rankings. You can find your site’s Core Web Vitals report directly in Google Search Console.
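It helps to know where the lines are. Google rates each metric at the 75th percentile of real page loads: LCP is “good” at 2.5 seconds or less and “poor” above 4 seconds, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25. This small Python sketch rates your own field numbers against those thresholds; the values in `field_data` are made up.

```python
# (good_max, poor_above) per metric. LCP and INP are in milliseconds; CLS is unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate(metric: str, p75_value: float) -> str:
    """Classify a 75th-percentile value as 'good', 'needs improvement' or 'poor'."""
    good_max, poor_above = THRESHOLDS[metric]
    if p75_value <= good_max:
        return "good"
    if p75_value <= poor_above:
        return "needs improvement"
    return "poor"


field_data = {"LCP": 3100, "INP": 180, "CLS": 0.31}  # hypothetical numbers
for metric, value in field_data.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```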

How Can You Pinpoint Exactly What’s Slowing You Down?

This is where Google PageSpeed Insights comes in. Enter your URL, and the tool will analyze your page and give you a performance score for both mobile and desktop. But don’t fixate on the score. The real value is in the “Opportunities” and “Diagnostics” sections below it.

This is your personalized to-do list for speeding up your site. It will point out the exact issues, such as “Eliminate render-blocking resources,” “Properly size images,” and “Reduce initial server response time.”
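PageSpeed Insights also exposes its results through a public API (v5), which is handy when you want the same to-do list for a batch of key URLs. The sketch below is a minimal Python example that assumes the `runPagespeed` endpoint and the `lighthouseResult` fields it returns at the time of writing; Lighthouse does rename and regroup audits between versions, and regular use calls for an API key, which is left out here. `failing_audits` is a hypothetical helper that simply lists scored audits below a cutoff.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def run_pagespeed(page_url: str, strategy: str = "mobile") -> dict:
    """Call the PageSpeed Insights API for one URL (needs network access)."""
    query = urlencode({"url": page_url, "strategy": strategy})
    with urlopen(f"{PSI_ENDPOINT}?{query}", timeout=120) as resp:
        return json.load(resp)


def performance_score(report: dict) -> int:
    """Lighthouse stores the category score as 0-1; the PSI page shows it as 0-100."""
    return round(report["lighthouseResult"]["categories"]["performance"]["score"] * 100)


def failing_audits(report: dict, threshold: float = 0.9) -> list[str]:
    """Titles of scored audits below `threshold`, worst first: a rough to-do list."""
    audits = report["lighthouseResult"]["audits"].values()
    scored = [a for a in audits if isinstance(a.get("score"), (int, float))]
    return [a["title"] for a in sorted(scored, key=lambda a: a["score"]) if a["score"] < threshold]
```

Usage: `report = run_pagespeed("https://yourwebsite.com/")`, then read `performance_score(report)` and the first few entries of `failing_audits(report)`.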

I once spent weeks trying to improve the speed of a personal blog I was building. The score was stuck in the “red” on mobile, and I was getting frustrated. I finally dug into the PageSpeed Insights report and saw the number one issue was a massive, unoptimized hero image I was using on every page. I thought I had compressed it, but I hadn’t done it properly. It was nearly 2MB. I ran it through an online compression tool, replaced the file, and my score instantly jumped by 30 points. Sometimes the biggest wins are the simplest fixes staring you in the face.

Are Your Images Weighing Down Your Pages?

Speaking of images, they are one of the most common culprits of slow-loading pages. High-resolution images look great, but they are often huge files that take a long time to download, especially on a mobile connection.

Your technical audit must include a check on image optimization. Using your crawler, you can often find images with large file sizes. Look for anything over 100-150 KB. These are prime candidates for optimization.

Here are the key things to fix:

- Compression: Use tools like TinyPNG or ImageOptim to shrink image file sizes without sacrificing too much quality.

- Modern Formats: Serve images in next-gen formats like WebP, which offers superior compression compared to traditional JPEGs and PNGs.

- Lazy Loading: Implement lazy loading, which means images outside of the initial viewport only load as the user scrolls down to them. This dramatically speeds up the initial page load time.
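For a quick first pass on a single page’s HTML, this minimal Python sketch flags `<img>` tags that point at older formats or lack the browser-native `loading="lazy"` attribute. Review its output by hand: your hero image (often the LCP element) should not be lazy-loaded, and images served through a `<picture>` element with a WebP `<source>` will be flagged even though they’re fine. `audit_images` is a hypothetical helper.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

MODERN_FORMATS = (".webp", ".avif")


class ImageAudit(HTMLParser):
    """Flags <img> tags that use older formats or lack native lazy loading."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = {name: (value or "") for name, value in attrs}
        src = a.get("src", "")
        path = urlsplit(src).path.lower()  # ignore ?query strings when checking the extension
        if path and not path.endswith(MODERN_FORMATS):
            self.findings.append((src, "not WebP/AVIF"))
        if a.get("loading", "").lower() != "lazy":
            self.findings.append((src, 'no loading="lazy"'))


def audit_images(html: str) -> list[tuple[str, str]]:
    """Return (src, problem) pairs for every questionable <img> on the page."""
    parser = ImageAudit()
    parser.feed(html)
    return parser.findings
```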

Are You Sending Mixed Signals with Duplicate or Thin Content?

Search engines want to show their users unique, valuable content. When they encounter multiple versions of the same page or pages with very little useful information, it creates confusion. It forces Google to decide which version to rank (if any) and can dilute the authority of your pages, ultimately harming your rankings.

How Do You Hunt Down and Eliminate Duplicate Content?

Duplicate content can arise for many technical reasons, often without you even realizing it. For example, search engines may see these as separate, duplicate pages:

- http://yourwebsite.com

- https://yourwebsite.com

- http://www.yourwebsite.com

- https://www.yourwebsite.com

To you, it’s all one website. To Google, it’s four potential versions of your homepage. Similar issues can occur with trailing slashes (/page vs. /page/) and with URL parameters used for tracking or filtering.
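To see how many of your crawled URLs collapse into the same page, you can strip away those differences and group whatever is left. This minimal Python sketch assumes the listed `utm_*`, `gclid`, and `fbclid` parameters only track visits and never change the page content; extend `TRACKING_PARAMS` to match your own site. The helper names are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit

# Assumption: these parameters only track visits and never change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                   "utm_content", "gclid", "fbclid"}


def duplicate_key(url: str) -> str:
    """Reduce a URL to a key that ignores protocol, www, trailing slash and tracking params."""
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    path = parts.path.rstrip("/") or "/"
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS)
    query = urlencode(kept)
    return f"{host}{path}" + (f"?{query}" if query else "")


def duplicate_groups(urls: list[str]) -> list[list[str]]:
    """Return groups of two or more URLs that probably serve the same page."""
    groups = defaultdict(list)
    for url in urls:
        groups[duplicate_key(url)].append(url)
    return [members for members in groups.values() if len(members) > 1]
```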

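The primary solution for this kind of duplication is the canonical tag (rel='canonical'). This is a snippet of HTML code that tells search engines which version of a URL is the “master” copy. Any duplicate versions should have a canonical tag pointing to this master URL, which consolidates ranking signals (like links) into one authoritative page. Keep in mind that Google treats the canonical tag as a strong hint rather than a strict command, so for the http/https and www variants, also 301 redirect every version to your preferred one.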

Your site crawler is your best friend here. It can identify pages with missing or incorrect canonical tags. It can also help you find instances of duplicate title tags and meta descriptions, which are often strong indicators of duplicate content issues.
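If you want to understand what your crawler is checking, this minimal Python sketch reads the `rel="canonical"` link from a page’s HTML and reports whether it is missing, self-referencing, pointing elsewhere, or conflicting (several different canonicals on one page, which Google may simply ignore). `canonical_status` is a hypothetical helper, and it compares URLs as exact strings after resolving relative links.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Records the href of every <link rel="canonical"> tag on the page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonicals.append(a.get("href", ""))


def canonical_status(page_url: str, html: str) -> str:
    """Describe the page's canonical tag relative to its own URL."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"
    target = urljoin(page_url, parser.canonicals[0])  # resolve relative hrefs
    return "self" if target == page_url else f"points to {target}"
```

On the master page you want “self”; on a duplicate you want “points to” followed by the master URL.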

What’s the Deal with Thin Content and How Do You Fix It?

Thin content refers to pages that have very little or no valuable content. Think of things like doorway pages, pages with just a few lines of text, or very similar product pages. These pages offer a poor user experience and can lead to algorithmic penalties from Google.

Use your crawler to identify pages with a low word count. While word count isn’t everything, a page with only 50 words is unlikely to be very helpful.
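Crawlers count words slightly differently, but the basic idea is to count the readable text and skip code. This minimal Python sketch ignores everything inside `<head>`, `<script>`, `<style>`, `<noscript>`, and `<template>`; note that it still counts navigation and footer text, so compare pages against each other rather than trusting the absolute number. `word_count` is a hypothetical helper.

```python
from html.parser import HTMLParser

# Content inside these tags is not part of the readable page copy.
SKIP_TAGS = {"head", "script", "style", "noscript", "template"}


class VisibleText(HTMLParser):
    """Collects text that sits outside the SKIP_TAGS elements."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html: str) -> int:
    """Approximate word count of the visible copy (navigation and footer included)."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())
```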

Once you’ve identified these thin pages, you have a few options:

- Improve it: The best option is to flesh out the content. Add more detail, images, and useful information to make the page a valuable resource.

- Consolidate it: If you have several thin pages covering very similar topics, consider combining them into one comprehensive “power page.” Be sure to 301 redirect the old URLs to the new one.

- Remove it: If the page serves no real purpose for users or SEO, consider deleting it and letting it return a 404 error (or 410 “Gone”).

Is Your Site Secure and Mobile-Friendly for Today’s Users?

We’ve moved beyond the days when these were optional extras. Today, website security and mobile-friendliness are table stakes. Google operates on a mobile-first index, meaning it primarily uses the mobile version of your site for ranking. And it actively flags sites that aren’t secure. Getting these wrong isn’t just bad for SEO; it’s bad for business.

Is Your HTTPS Implementation Flawless?

HTTPS is the secure version of HTTP. The “S” stands for secure, indicating that data passed between your website and the user’s browser is encrypted. You can tell a site is secure by the little padlock icon in the browser’s address bar.

Having an SSL certificate and using HTTPS across your entire site is a must. It’s a small ranking signal, but more importantly, it builds trust with users.

However, just having the certificate isn’t enough. You need to ensure it’s implemented correctly. The most common issue is “mixed content.” This occurs when an HTTPS page loads some of its resources (like images, scripts, or stylesheets) over an insecure HTTP connection. This breaks the security of the page and can cause browsers to show a warning to users.

Use your crawler to find mixed content issues. It can scan your HTTPS pages and flag any resources being loaded over HTTP. Fixing these is usually as simple as changing the resource URL from http:// to https://. You should also ensure that all HTTP versions of your site automatically 301 redirect to the HTTPS version. For more on this, you can review Google’s own documentation on securing your site with HTTPS.
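If you want to check a single page’s source yourself, this minimal Python sketch lists the sub-resources an HTTPS page would load over plain `http://`. It only looks at common fetching tags and attributes (including `srcset`), skips `<link>` tags that aren’t stylesheets, icons, or preloads (a canonical link isn’t fetched as a page resource), and won’t see resources injected later by JavaScript or CSS. `find_mixed_content` is a hypothetical helper.

```python
from html.parser import HTMLParser

FETCHING_TAGS = {"img", "script", "iframe", "source", "video", "audio", "embed", "object", "link"}
URL_ATTRS = ("src", "href", "data", "poster")
RESOURCE_RELS = {"stylesheet", "icon", "preload"}


class MixedContentFinder(HTMLParser):
    """Lists sub-resources an HTTPS page would request over plain http://."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag not in FETCHING_TAGS:
            return
        a = {name: (value or "") for name, value in attrs}
        # <link> also covers canonical/alternate links, which are not page resources.
        if tag == "link" and not RESOURCE_RELS & set(a.get("rel", "").lower().split()):
            return
        candidates = [a[name] for name in URL_ATTRS if name in a]
        # srcset holds comma-separated "url descriptor" pairs.
        candidates += [c.split()[0] for c in a.get("srcset", "").split(",") if c.strip()]
        self.insecure += [u for u in candidates if u.strip().lower().startswith("http://")]


def find_mixed_content(html: str) -> list[str]:
    """Return every http:// resource URL found in the page's HTML."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```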

How Does Your Site Look and Feel on a Smartphone?

Go ahead, pick up your phone and browse your own website. Don’t just look at the homepage. Navigate through your services, read a blog post, try to fill out a contact form. Is it an easy, pleasant experience? Or do you have to pinch and zoom? Are the buttons too small to tap?

As mentioned, Google now uses mobile-first indexing. This means the mobile version of your site is the one that matters most for ranking. A poor mobile experience will directly harm your visibility.

Google retired its standalone Mobile-Friendly Test and the Search Console “Mobile Usability” report in December 2023, so don’t go looking for them. Instead, start your mobile audit with Lighthouse (built into Chrome DevTools) or PageSpeed Insights, which shows the mobile results first. Chrome DevTools’ device mode also lets you preview key pages at phone screen sizes, which makes problems like text that’s too small to read or buttons that are too close together easy to spot.
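One basic thing to confirm on every template is the responsive viewport tag. Without something like `<meta name="viewport" content="width=device-width, initial-scale=1">`, phones render the page at desktop width and shrink it down, which causes exactly the pinch-and-zoom problem described above. This minimal Python sketch checks for it; `viewport_problem` is a hypothetical helper and no substitute for a full mobile audit such as Lighthouse’s.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportParser(HTMLParser):
    """Captures the content of the <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() == "viewport":
            self.content = a.get("content", "")


def viewport_problem(html: str) -> Optional[str]:
    """Describe a viewport problem, or return None if the tag looks responsive."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.content is None:
        return "no viewport meta tag"
    if "width=device-width" not in parser.content.replace(" ", "").lower():
        return "viewport does not use width=device-width"
    return None
```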

Conclusion: Your Audit Is a Compass, Not Just a Map

Completing a technical SEO audit is a massive accomplishment. It transforms the invisible and confusing aspects of your website into a clear, prioritized list of actions. You’ve moved from guessing to knowing. This guide has equipped you with the process and tools to take control of your site’s technical health, laying a foundation that allows your great content to finally shine.

But remember, a technical audit is not a one-and-done task. It’s a living process. Websites evolve, new content is added, and search engine algorithms change. Think of your first audit as drawing the map. Regular check-ins, perhaps quarterly, are like checking your compass to ensure you’re still heading in the right direction. By making technical SEO a regular part of your routine, you ensure your foundation remains solid, ready to support any marketing goal you set for yourself. Now go, find those errors, and fix them. Your future traffic will thank you.

FAQ

What is the importance of a technical SEO audit for my website’s performance?

A technical SEO audit is crucial because it identifies invisible, technical issues that can negatively impact your website’s visibility and ranking on Google, ensuring your content reaches the right audience.

What are the essential tools needed to conduct a basic technical SEO audit?

The three essential tools are Google Search Console, a website crawler like Screaming Frog SEO Spider, and Google PageSpeed Insights, which together help identify errors, crawl issues, and speed optimizations.

How do I establish a baseline before starting my SEO audit?

Before making any changes, take a snapshot of your site’s current health by recording key metrics in Google Analytics and Search Console, and run a crawl with Screaming Frog to document existing errors and issues.

How can I verify if Google is properly indexing my website?

You can check by searching ‘site:yourwebsite.com’ on Google to get a rough estimate of how many pages are indexed, or use Google Search Console’s Indexing report to see exactly which pages are indexed and why others might not be.

What should I look for to improve my website’s speed and ranking potential?

Focus on optimizing Core Web Vitals like loading performance, interactivity, and visual stability by analyzing reports in Google Search Console and PageSpeed Insights, fixing issues such as large images, unoptimized code, and server response times.