Your server has a diary.

And it writes down everything. Every visitor. Every request. Every single error. This diary is your server log file. For years, I’ll be honest, I completely ignored it. I mean, why bother, right? I had Google Search Console. I had Google Analytics. I had my rank tracker. What else could I possibly need?

I figured log files were just for the “IT guy.” A messy, technical text file that had zero to do with my job as an SEO.

I was dead wrong.

My big “a-ha” moment hit me while I was working on a massive e-commerce site. We’d launch new products, and they would just sit there for weeks, not getting indexed. Our rankings on existing pages were slipping. All GSC would tell me was “Discovered – currently not indexed,” which is about as helpful as a screen door on a submarine. We were stuck.

Finally, out of pure desperation, I figured out how to get the log files. What I found was a gut punch. Googlebot was spending 80% of its time crawling old, parameterized URLs from our faceted navigation—URLs that were supposedly blocked by robots.txt (spoiler: it was hitting them anyway). It was also crawling a staging site we thought was firewalled.

It was barely even looking at our new product pages.

That was the day I realized: all my other tools were showing me what Google reported. The log files showed me what Google actually did. Learning how to do log file analysis for SEO wasn’t just some “nice to have” skill; it was the key to the entire kingdom.

If you’re ready to stop guessing and start knowing what’s really happening on your site, you’re in the right place.


Key Takeaways

- Log files are the 100% unfiltered truth. GSC is a nice summary. It’s also sampled and delayed. Your log file is the raw, real-time record of every single thing that hits your server. No exceptions.

- It’s all about the crawl budget. Logs give you the most complete view of how Google is spending its limited time (crawl budget) on your site. You’ll see exactly what it crawls, how often, and, most importantly, what it wastes time on.

- You don’t need to be a coder. The files look nasty, I get it. But modern tools do all the heavy lifting. The real skill is just learning which questions to ask.

- This is your ultimate diagnostic tool. Logs show you the why behind your worst SEO problems: terrible indexation, 404 errors Google keeps finding, 5xx server errors, and crawl budget being torched on redirect chains.

- You need a real tool. Do not try to open a 1GB log file in Excel. It will crash. You need a dedicated tool like Screaming Frog Log File Analyser to make sense of the data.

What Are Server Logs, and Why Do They Feel So Intimidating?

Let’s just get this out of the way. Server logs look scary.

The first time you open one, it looks like a scene from The Matrix. Just an endless, cascading wall of text, numbers, and IP addresses. It feels like you’re not supposed to be in there. Like you wandered into the building’s server room and might accidentally unplug the whole company.

But here’s the secret: it’s just a list. That’s it.

Think of your server as a ridiculously busy restaurant. Every time a customer (a user’s browser) or a delivery driver (Googlebot) comes to the door, the host at the front podium writes down exactly what happened in a big ledger.

- “A customer from 123.45.67.89…”

- “…at 10:30:01 AM…”

- “…asked for the menu at /our-best-product…”

- “…and I gave it to them (Code 200).”

That ledger is your server log. It’s not magic. It’s not complex code. It’s just a chronological, line-by-line record of every single request. The intimidation factor is purely about the volume of data, not the complexity of a single line.

Where Do These Log Files Actually Come From?

Your website doesn’t just float in the cloud. It lives on a real (or virtual) computer called a server. That server runs software like Apache, NGINX, or Microsoft IIS. This software is the program that “serves” your website’s files to visitors. It’s the “host” at the restaurant podium.

And every single time it hands over a file—an HTML page, an image, a CSS file, a PDF, anything—it makes a new entry in a plain text file, usually called an access.log. This file just sits there on the server, growing larger and larger, line by line, second by second, recording everything.

What Does a Single Line in a Log File Even Mean?

Okay, let’s pull one of those scary-looking lines apart. It’s much simpler than you think. A standard “Combined” log format (which is super common) looks something like this:

123.45.67.89 - - [22/Oct/2025:10:30:01 +0100] "GET /my-seo-page HTTP/1.1" 200 1543 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"

Let’s translate that into plain English:

- 123.45.67.89 (The IP Address): This is who asked. It’s the network address of the visitor, whether it’s a person or a search engine bot. The two dashes after it are placeholder fields (identity and logged-in user) that are almost always empty.

- [22/Oct/2025:10:30:01 +0100] (The Timestamp): This is when it happened, right down to the second. The +0100 is the server’s offset from UTC.

- "GET /my-seo-page HTTP/1.1" (The Request): This is what they wanted. GET just means “give me this page.” /my-seo-page is the URL they asked for, and HTTP/1.1 is the protocol version.

- 200 (The Status Code): This is the single most important part for us. It’s the server’s answer. 200 means “OK, here’s the page.”

- 1543 (The Size): The size of the response that was sent, in bytes.

- "https://www.google.com/" (The Referrer): This is how they found the page. In this case, they clicked a link from Google. Bots like Googlebot usually leave this field empty, which shows up as "-".

- "Mozilla/5.0..." (The User-Agent): This is the visitor’s “signature.” It’s a text string that identifies what browser (Chrome, Safari) or bot (Googlebot, Bingbot) is asking.
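You don’t need to write code for any of this, but if you’re curious what a log analyzer does with each line, here’s a minimal Python sketch that splits a Combined-format line into the fields above. It assumes your server uses the standard Combined format (custom formats need a different pattern), and parse_line is a hypothetical helper name, not part of any tool.

```python
import re

# Apache/NGINX "Combined" log format:
# IP, identity, user, [timestamp], "request", status, size, "referrer", "user-agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line):
    """Split one Combined-format line into a dict, or return None if it doesn't match."""
    match = COMBINED.match(line)
    if match is None:
        return None  # malformed or non-Combined line (e.g. a bare "-" request)
    hit = match.groupdict()
    hit["status"] = int(hit["status"])
    # Size is "-" when no body was sent (common for 304 responses)
    hit["size"] = 0 if hit["size"] == "-" else int(hit["size"])
    return hit


line = (
    '123.45.67.89 - - [22/Oct/2025:10:30:01 +0100] "GET /my-seo-page HTTP/1.1" '
    '200 1543 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
    'AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36"'
)
hit = parse_line(line)
print(hit["url"], hit["status"], hit["size"])  # /my-seo-page 200 1543
```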

See? Not so bad. It’s just a sentence, written in a specific format. You now know how to read a server log.

Isn’t My Google Search Console Data Enough?

This is the question I get most. It’s the same one I asked for years. Why go through all this trouble when GSC has a “Crawl Stats” report?

It’s a fair question. But it’s based on a fundamental misunderstanding.

Using GSC for crawl analysis is like asking a fisherman for a report on the ocean’s health. He can give you a decent summary. He’ll tell you what he caught, where he saw some other boats, and what the weather was like. It’s useful, it’s accurate (from his view), and it’s a great overview.

Using log files is like putting on a deep-sea diving suit with a high-powered flashlight and exploring the entire ocean floor yourself. You see everything. The fish he missed. The garbage on the seafloor. The shipwrecks. The exact currents.

GSC data is a sampled, summarized, and often-delayed report from Google’s point of view. Log files are the complete, raw, real-time truth from your server’s point of view.

What’s the Real Difference Between GSC and Log Files?

Sampling. The GSC Crawl Stats report is not a complete log. Google tells you this. It’s a simplified overview to help you spot major issues. It won’t show you every single crawl, every 404 error, or every 301 redirect.

Here’s a real-world example: GSC might show you a chart of 404 errors and list 10-15 example URLs. Your log files, on the other hand, might reveal that Googlebot is actually hitting 5,000 different 404 URLs, many of them tiny variations of the same parameter, wasting thousands of crawl requests every single day.

You would never see that in Search Console.

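Log files are the only source of truth for 100% of the crawl requests that reach your server. (If you use a CDN that answers some requests from its own cache, those hits may only show up in the CDN’s logs.) This isn’t my opinion; it’s a technical fact.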

What’s This “Crawl Budget” Everyone Talks About?

This is the whole reason we’re doing this. “Crawl Budget” sounds like a fancy SEO buzzword, but it’s a really simple idea.

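Google does not have infinite time or resources. It cannot, and will not, crawl every single page on your site every single day. It allocates a “budget” for your site based on how much crawling your server can handle without slowing down, and how much Google wants to crawl it (how popular your pages are, how big the site is, and how often your content changes).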

If you have a small 50-page blog, Google might stop by for a few minutes every couple of days. If you’re The New York Times, Google has practically moved in and is living on your server 24/7.

The problem is when that budget gets wasted.

Is Google Wasting Its Time on My Site?

For most sites, the answer is a resounding “yes.”

Googlebot is a machine. It just follows links. If you have a broken internal link from a blog post you wrote in 2015, Googlebot will keep following that link and hitting a 404 page. Forever. If you have a messy redirect chain (Page A -> B -> C -> D), Googlebot will follow that entire chain, making four requests (A, B, C, and D) when a direct link to D would have taken one.

It will crawl your faceted navigation (/products?color=red&size=large). It will crawl your staging site if it’s not password-protected (like mine was!). It will crawl old login pages, expired coupons, and image attachment pages.

Every single second it spends crawling that junk is a second it doesn’t spend crawling your brand-new, money-making product page or your brilliant new blog post.

How Can Log Files Help Me Optimize My Crawl Budget?

This is the payoff. By analyzing your log files, you can build a precise map of where Google’s time is going. You can definitively answer questions that are impossible to answer otherwise:

- What percentage of Google’s crawls are hitting 200 (OK) pages vs. 404 (Not Found) or 503 (Server Error) pages?

- How often is Google really crawling my most important “money” pages? (Is it daily? Weekly? Never?)

- Is Google crawling pages that are blocked by robots.txt? (You’ll be shocked).

- Is Google actually finding and crawling my XML sitemaps?

- What’s the crawl frequency of my high-priority pages vs. my low-priority “junk” pages?

Once you see that 40% of your crawl budget is being torched on 404s and redirect chains, you have a clear, actionable to-do list. Fix those, and you free up that 40% for Google to spend on your actual content. This is how you get new pages crawled, and ultimately indexed, faster.
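To make the first question in that list concrete, here’s a rough Python sketch of the math a log tool does for you. It assumes you’ve already pulled out verified Googlebot hits as (URL, status code) pairs; the sample data and the status_breakdown name are hypothetical.

```python
from collections import Counter


def status_breakdown(hits):
    """Share of hits per status class (2xx, 3xx, 4xx, 5xx), as percentages.

    `hits` is an iterable of (url, status) pairs, e.g. verified Googlebot requests.
    """
    classes = Counter(f"{status // 100}xx" for _url, status in hits)
    total = sum(classes.values())
    if total == 0:
        return {}
    return {cls: round(100 * count / total, 1) for cls, count in sorted(classes.items())}


googlebot_hits = [  # hypothetical sample
    ("/products/blue-widget", 200),
    ("/new-product", 200),
    ("/blog/best-widgets", 200),
    ("/old-category", 301),
    ("/products?color=red&size=large", 404),
]
print(status_breakdown(googlebot_hits))  # {'2xx': 60.0, '3xx': 20.0, '4xx': 20.0}
```

In this made-up sample, 40% of Googlebot’s requests went to a redirect and a 404 instead of real pages.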

How Do I Actually Get My Server Logs?

This is usually the first hurdle for beginners. The logs are just files, but they’re files sitting on your server, not your laptop. You have to go get them. How you do this depends entirely on your hosting setup.

What if I’m on Shared Hosting? (Like GoDaddy, Bluehost, etc.)

If you’re on a common shared hosting plan, you likely have a “cPanel.” This is your friend.

- Log in to your cPanel.

- Look for a section called “Metrics” or “Logs.”

- You should see an icon for “Raw Access Logs” or just “Access Logs.”

- It will usually give you an option to download the log file for your domain, often as a .gz file (a gzip-compressed file, similar to a zip).

Just download that file. That’s it. You’ve got your log.

What About VPS or Dedicated Servers?

If you have a Virtual Private Server (VPS) or a dedicated server, you have more power, which means things are a bit more complex. You (or your dev) will have “root access.” The logs are typically stored in a specific folder.

- For Apache servers (super common), they’re usually in /var/log/apache2/ (or /var/log/httpd/ on Red Hat-based systems like CentOS).

- For NGINX servers, they’re often in /var/log/nginx/

You’ll need to use an SFTP client (like FileZilla) or SSH (a command-line tool) to log in to your server and grab the access.log file.

What’s the Easiest Way for a Beginner to Do This?

Don’t be a hero.

Just ask your hosting provider.

Send a support ticket or open a live chat and say, “Hi, I need to get the raw server access logs for mydomain.com for the last 7 days. Can you please either show me where to download them or send them to me?” 99% of the time, they will happily do it. This is a standard request. Don’t waste hours playing server administrator if you don’t have to.

Okay, I Have a Giant .log File… Now What?

You did it. You have a file on your computer, probably named access.log or yourdomain.com.log.gz.

DO NOT TRY TO OPEN THIS IN EXCEL.

I have to put that in bold because every single one of us makes this mistake once. I still have nightmares about the time I tried to open a 5GB log file on my (then) brand-new MacBook. The fans spun up like a jet engine, the famous “spinning beachball of death” appeared, and I had to do a hard reboot.

A single day’s worth of logs for a medium-sized site can contain millions of lines. Excel tops out at 1,048,576 rows per sheet, so it simply can’t handle it, and many plain text editors will choke too. You need a specialized tool designed to “parse” (read and organize) these specific files.
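This is also why dedicated tools read logs one line at a time instead of loading the whole file. As a rough illustration (not how any particular tool is built), here’s a Python sketch that streams a plain or .gz log and counts lines mentioning Googlebot. The file name in the usage comment is hypothetical, and the count is unverified, since any bot can claim to be Googlebot.

```python
import gzip


def iter_log_lines(path):
    """Yield one line at a time from a plain or gzip-compressed log.

    Only the current line is held in memory, so this works on multi-GB files.
    """
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt", encoding="utf-8", errors="replace") as f:
        for line in f:
            yield line.rstrip("\n")


def count_googlebot_lines(path):
    """Count lines whose text mentions Googlebot (NOT verified, see below)."""
    return sum(1 for line in iter_log_lines(path) if "Googlebot" in line)


# Usage (hypothetical file name):
# print(count_googlebot_lines("yourdomain.com.log.gz"))
```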

Can I Just Use Excel?

No. Seriously. Please, trust me on this. Don’t do it.

What are the Go-To Log Analyzer Tools for SEOs?

For an SEO, you don’t just want to read the logs; you want to analyze them. You want to filter by bot, see status codes, and, most importantly, cross-reference the log data with a crawl of your own site. This is where a dedicated SEO log analyzer comes in.

Here are the industry-standard tools for the job:

- Screaming Frog SEO Spider & Log File Analyser: This is my personal workhorse. It’s a two-part system. First, you use the “SEO Spider” to crawl your site. Then, you use the “Log File Analyser” to import your log files. The magic happens when you combine them. The tool maps the log data to your crawl data, giving you a beautiful, easy-to-read dashboard. It’s not free, but it’s worth its weight in gold.

- Semrush: The log file analyzer from Semrush is another fantastic, premium option. It’s built right into their site audit tool and does a great job of integrating log data with other audit findings, all in a clean, web-based interface.

- JetOctopus: This is a powerful, cloud-based crawler and log analyzer. It’s built for scale and can handle massive websites, combining crawl, log, and GSC data in one platform. It’s more of an enterprise-level tool, but it’s incredibly powerful.

Are There Any Free Options to Get Started?

Yes! While the full version of Screaming Frog Log File Analyser requires a license, it has a free version that lets you analyze up to 1,000 log file lines.

For a small blog, this is perfect for getting your feet wet. You can download a single day’s log, run it through the free tool, and get a basic understanding of what’s happening. It’s a great way to learn without any financial commitment.

What’s the First Thing I Should Look For?

You have your logs. You have your tool. You’ve loaded the file. Now you’re looking at a dashboard. Where do you even begin?

The very first step, before anything else, is to isolate Googlebot. Your log file contains everything: your own visits, your customers, spambots, Bingbot, AhrefsBot, and, somewhere in that mess, Googlebot. We need to filter out all the noise and focus only on what Google is doing.

Luckily, every good tool has a “User-Agent” filter. You’ll want to filter for Googlebot.

But wait. There’s a catch.

How Can I Be Sure It’s Really Googlebot?

Here’s a dirty little secret of the web: any bot can lie. A spambot can easily tell your server, “Hi, my User-Agent is Googlebot!” just to try and get past security. This is called “spoofing.”

So, how do you know you’re looking at the real Google? The answer is a Reverse DNS Lookup.

This sounds hyper-technical, but it’s simple.

- A DNS lookup turns a domain name (like google.com) into an IP address (like 172.217.14.228).

- A Reverse DNS lookup does the opposite: it checks an IP address (like 66.249.66.1) to see what domain name it really belongs to.

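All official Googlebot crawlers will have an IP address that resolves back to a domain ending in googlebot.com or google.com. There’s one more step: you then run a normal (forward) DNS lookup on that domain name and confirm it points back to the same IP address. Without that second check, anyone who controls the reverse DNS for their own IP could make it claim to be googlebot.com.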
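A reverse lookup alone isn’t quite enough; Google’s guidance is to follow it with a forward lookup on the hostname and confirm it returns the original IP. Here’s what that two-step check might look like as a minimal Python sketch using the standard socket module. The lookups are passed in as parameters so you can test it offline, and is_verified_googlebot is a hypothetical name; real tools may also check Google’s published IP ranges instead.

```python
import socket

GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse DNS (IP -> hostname), then forward DNS back to the same IP."""
    try:
        hostname, _aliases, _ips = reverse_lookup(ip)  # step 1: IP -> hostname
    except OSError:
        return False  # no reverse DNS record at all
    if not hostname.endswith(GOOGLE_SUFFIXES):
        return False  # hostname isn't on a Google domain
    try:
        _name, _aliases, forward_ips = forward_lookup(hostname)  # step 2: hostname -> IPs
    except OSError:
        return False
    return ip in forward_ips  # must point back to the IP that hit your server


# Real DNS lookups (result depends on live DNS):
# print(is_verified_googlebot("66.249.66.1"))
```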

The good news? Every log analyzer tool I mentioned (Screaming Frog, Semrush, JetOctopus) does this for you automatically. They will “verify” the bots, so you can be confident that when you filter for “Verified Googlebot,” you are looking at the real deal.

What Are These “Status Codes” Telling Me?

Now we get to the gold. Once you’ve filtered for verified Googlebot, the next thing you must look at is the “Status Codes” report. This is a summary of your server’s replies to Google.

200 (OK): Is Google Crawling My Most Important Pages?

A 200 status code means “Success! Here is the page.” This is what you want to see. But the question isn’t “Am I getting 200s?” The question is “Am I getting 200s on the right pages?”

Your log file tool will show you a list of all URLs that returned a 200 code. Sort this list by “Number of Crawls.” Are your most important, high-converting product and service pages at the top? Or is Google spending all its time successfully crawling your “About Us” page and your blog’s privacy policy?

This report shows you Google’s priorities. If they don’t match your priorities, you have a problem. You may need to add more internal links to your money pages to signal their importance.

301 (Permanent Redirect): Are My Redirects Wasting Crawl Budget?

A 301 code means “This page has moved permanently. Go over there instead.” Redirects are a normal part of the web. But in your logs, you need to ask two questions:

- Is Google crawling my old 301s over and over again? If you migrated your site two years ago, Google should not still be hammering your old URLs. If it is, it means you have internal links on your own site still pointing to the old pages. You are forcing Google to take a detour every single time. Go find those internal links and update them to point directly to the new (200) page.

- Am I seeing redirect chains? This is when Page A 301s to Page B, which then 301s to Page C. This is a massive waste of crawl budget. Your log analyzer will spot these. You need to fix them so that Page A redirects directly to Page C.
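If you want to see how a chain gets untangled, here’s a small Python sketch that follows a redirect map to its final destination. The redirects dictionary is hypothetical; in practice you’d build it from your crawl or log data (each redirecting URL mapped to where it points). The 10-hop cap is just an arbitrary safety limit.

```python
def resolve_redirects(start, redirects, max_hops=10):
    """Follow a {source: target} redirect map from `start` and return the whole chain."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops starting at {start}")
    return chain


redirects = {"/page-a": "/page-b", "/page-b": "/page-c"}  # hypothetical
chain = resolve_redirects("/page-a", redirects)
print(" -> ".join(chain))  # /page-a -> /page-b -> /page-c
print(len(chain) - 1, "hops; point /page-a straight at", chain[-1])  # 2 hops; point /page-a straight at /page-c

# Every redirecting URL mapped to its final destination: your fix list
fixes = {src: resolve_redirects(src, redirects)[-1] for src in redirects}
print(fixes)  # {'/page-a': '/page-c', '/page-b': '/page-c'}
```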

304 (Not Modified): Is This a Good Thing or a Bad Thing?

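This is the most misunderstood status code. When Googlebot asks for a page it has seen before, it can send a conditional request (with an If-Modified-Since or If-None-Match header). A 304 is the server’s reply meaning “The page hasn’t changed since you last saw it. Save your bandwidth. The version you have is still good.”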

This is a great thing!

A high number of 304s is a sign of a healthy, well-configured site. It means your server is correctly telling Google, “Nope, nothing new here, save your time,” and Google can move on to other, more important pages. It improves your crawl budget. If you see a lot of 304s, give your developer a high-five. If you see very few, it might mean your server isn’t configured for caching properly, and Google is re-downloading your entire, unchanged site every day.
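Your web server normally handles this for you, but here’s a simplified Python sketch of the decision it makes for an If-Modified-Since request. It ignores ETags (If-None-Match) to stay short, and response_status is a hypothetical name, not a real server API.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def response_status(last_modified, if_modified_since=None):
    """Pick 200 or 304 for a GET request, based only on If-Modified-Since.

    last_modified: timezone-aware datetime of the page's last change.
    if_modified_since: the raw header value the crawler sent, or None.
    """
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            return 200  # unparseable date: just send the full page
        if since.tzinfo is None:
            since = since.replace(tzinfo=timezone.utc)  # "-0000" dates come back naive
        # HTTP dates have one-second precision, so drop microseconds before comparing
        if last_modified.replace(microsecond=0) <= since:
            return 304  # unchanged since the crawler's copy: no body sent
    return 200  # page changed, or it wasn't a conditional request


page_changed = datetime(2025, 10, 1, 9, 0, tzinfo=timezone.utc)
header = format_datetime(datetime(2025, 10, 20, 12, 0, tzinfo=timezone.utc), usegmt=True)
print(header)                                 # Mon, 20 Oct 2025 12:00:00 GMT
print(response_status(page_changed, header))  # 304
print(response_status(page_changed))          # 200
```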

404 (Not Found): Where is Google Finding These Broken Links?

This is the big one. A 404 means “Someone asked for a page that doesn’t exist.” Google Search Console will report some of these. Your log file will show you all of them.

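When you see Googlebot hitting 404s, the critical question is WHY? Where is Google finding these bad links? The log file’s “Referrer” column is part of your treasure map, with one catch: Googlebot usually doesn’t send a referrer, so its own hits typically show "-". Check the referrer on any human visits to the same broken URL, and look up the URL’s inlinks in your own site crawl (Screaming Frog’s SEO Spider lists these in its “Inlinks” tab).

If the link is on one of your own pages, you have a broken internal link. Go fix it. If it’s on an external site, another site is linking to a broken page on your site. You should set up a 301 redirect from that broken URL to the most relevant live page to reclaim that “link juice.”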

Without logs, you’re just guessing. With logs, you have a precise, actionable list of broken links that Google cares about (because it’s crawling them) and a map of where it’s finding them.
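Building that list is mostly counting. Here’s a minimal Python sketch, assuming you already have verified Googlebot hits as (URL, status code) pairs; the data and the top_404s name are hypothetical.

```python
from collections import Counter


def top_404s(hits, n=20):
    """Most-crawled 404 URLs from (url, status) pairs, busiest first."""
    counts = Counter(url for url, status in hits if status == 404)
    return counts.most_common(n)


hits = [  # hypothetical verified-Googlebot sample
    ("/old-promo", 404), ("/old-promo", 404), ("/old-promo", 404),
    ("/products?color=red&size=lg", 404),
    ("/products/blue-widget", 200),
]
for url, crawls in top_404s(hits):
    print(crawls, url)
# 3 /old-promo
# 1 /products?color=red&size=lg
```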

5xx (Server Errors): Is My Site Falling Over When Google Visits?

These are the red-alert, all-hands-on-deck emergencies. A 5xx error (like 500 Internal Server Error or 503 Service Unavailable) means your server is failing. It’s not just “page not found”; it’s “the server broke while trying to find the page.”

If Googlebot sees these, it’s a major negative signal. It tells Google your site is unstable and unreliable. If it sees them often enough, it will slow its crawl rate to a crawl (to avoid “hurting” your server) and may drop your pages from the index entirely.

Your GSC report will show these… eventually. Your log files show you the instant they happen. If you see a spike in 5xx errors, you don’t send a memo—you call your hosting provider or developer immediately.
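Spotting a spike is easier when you bucket errors by hour. This is a rough Python sketch assuming you’ve extracted (timestamp, status code) pairs from verified Googlebot hits, with timestamps in the Combined format shown earlier; the sample data and function name are hypothetical.

```python
from collections import Counter
from datetime import datetime


def server_errors_by_hour(hits):
    """Count 5xx responses per hour from (timestamp, status) pairs.

    Timestamps are in the Combined log format, e.g. "22/Oct/2025:10:30:01 +0100",
    and are bucketed in the server's own time zone. %b expects English month
    names (Python's default C locale).
    """
    per_hour = Counter()
    for stamp, status in hits:
        if 500 <= status <= 599:
            when = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z")
            per_hour[when.strftime("%Y-%m-%d %H:00")] += 1
    return per_hour


hits = [  # hypothetical verified-Googlebot sample
    ("22/Oct/2025:10:30:01 +0100", 503),
    ("22/Oct/2025:10:45:12 +0100", 503),
    ("22/Oct/2025:11:02:40 +0100", 500),
    ("22/Oct/2025:11:05:00 +0100", 200),
]
for hour, count in sorted(server_errors_by_hour(hits).items()):
    print(hour, count)
# 2025-10-22 10:00 2
# 2025-10-22 11:00 1
```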

How Do I Find Out Which Pages Google Never Crawls?

This is the report that pays for the software, right here. This is the single most powerful report you can generate.

You need to combine two data sets:

- A full list of all crawlable pages on your site. (You get this from your Screaming Frog crawl).

- A full list of all pages Googlebot crawled. (You get this from your log file).

Now, you just subtract one from the other.

[All My Pages] - [Pages Google Crawled] = [Pages Google Ignored]
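That subtraction is literally a set difference. Here’s a minimal Python sketch; it assumes both lists use the same URL format (same host, same trailing-slash style), since a mismatch will make pages look ignored when they aren’t. The URLs and the function name are hypothetical.

```python
def pages_google_ignored(site_urls, crawled_urls):
    """[All My Pages] - [Pages Google Crawled] = [Pages Google Ignored]."""
    return sorted(set(site_urls) - set(crawled_urls))


all_my_pages = ["/", "/services/", "/services/audit", "/blog/log-files"]  # from your crawl
googlebot_crawled = ["/", "/blog/log-files", "/old-promo"]  # from verified Googlebot hits
print(pages_google_ignored(all_my_pages, googlebot_crawled))
# ['/services/', '/services/audit']
```

URLs Google crawled that aren’t in your crawl (like /old-promo here) are worth a look too, but they don’t belong on this list.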

These are your “Orphaned” pages (from Google’s perspective), at least for the period your logs cover. These are pages that Google either doesn’t know about or doesn’t care about. This list is your most important SEO to-do list.

Why is Google ignoring them? 99% of the time, it’s because they have no, or very few, internal links. You have “money” pages sitting on your site that your own site doesn’t even link to. By identifying this list, you can build a new internal linking plan to point links to these pages, signaling to Google, “Hey! This page is important!”

What if Google Crawls Pages I Don’t Want it To?

This is the opposite problem, but it’s just as common. You look at your logs and see Googlebot spending thousands of requests crawling:

- Your search results pages (e.g., /?s=my-search)

- Your tag pages or archive pages

- PDF files or old Word docs

- URLs with weird parameters (?source=email&campaign=spring-sale)

These are all pages you likely have (or should have) a “noindex” tag on. You don’t want them in the index. But here’s the kicker: “noindex” does not stop Google from crawling them. It only stops Google from indexing them. They still eat your crawl budget.

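This is what your robots.txt file is for. By identifying these low-value, high-crawl URLs in your logs, you can go into your robots.txt file and add a Disallow: rule to block them. This tells Google, “Don’t even bother crawling this section,” which frees up that budget for your important pages. One caution: once a URL is disallowed, Google can no longer crawl it to see its “noindex” tag, so a blocked URL that other pages link to can still show up in search results.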

Does Google Obey My robots.txt?

How do you know if Google is honoring your robots.txt file? You look at the logs!

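If you Disallow: /search/ in your robots.txt, but your log files show Googlebot hitting those URLs 5,000 times a day, you have a clear signal that something is wrong. (Note: Google will still hit a URL to read its “noindex” tag if it isn’t blocked in robots.txt, but once it’s disallowed, the crawling should stop. When it doesn’t, the usual suspects are a typo in the rule, a brand-new rule Google hasn’t picked up yet, since it generally caches robots.txt for up to 24 hours, or “Googlebot” hits that fail bot verification.)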

This is also how you spot malicious bots. A bot that ignores your robots.txt file is almost certainly not one you want on your site.
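You can run a quick first pass on the robots.txt question yourself with Python’s built-in urllib.robotparser, which checks URLs against robots.txt rules. This sketch assumes you’ve got the list of URLs verified Googlebot requested; the rules, URLs, and function name are hypothetical. One big caveat: the standard-library parser doesn’t support Google’s * and $ wildcards, so only trust it for simple prefix rules like Disallow: /search/.

```python
from urllib.robotparser import RobotFileParser


def disallowed_hits(robots_lines, googlebot_urls, agent="Googlebot"):
    """Return logged Googlebot URLs that your robots.txt disallows.

    Simple prefix matching only: Google-style * and $ wildcards aren't supported.
    """
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in googlebot_urls if not parser.can_fetch(agent, url)]


robots_txt = """\
User-agent: *
Disallow: /search/
""".splitlines()

logged = ["/search/red-widgets", "/products/blue-widget", "/search/?q=sale"]  # hypothetical
print(disallowed_hits(robots_txt, logged))
# ['/search/red-widgets', '/search/?q=sale']
```

If that list isn’t empty for verified Googlebot hits, it’s time to double-check the rule itself.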

How Can I See How My Site Changes Affect Google?

Log files are the ultimate feedback loop. As an SEO, I don’t “make a change” and “hope it works” anymore. I “make a change” and then “check the logs.”

Did My New Site Section Get Crawled?

You just launched a brand new “Services” section on your site. You submitted the new URLs in your sitemap. Is Google finding them?

Instead of waiting weeks for GSC to update, you can check your logs the next day. Filter for the URLs in that new /services/ directory. Are you seeing hits from Googlebot? If yes, congratulations! If no, you know you have a problem. Google isn’t finding them. You need to build more internal links from your homepage and other high-authority pages to get the bot’s attention.
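If you’d rather check this straight from raw data, here’s a small Python sketch that finds the first Googlebot hit for each URL under /services/. It assumes (timestamp, URL) pairs from verified Googlebot hits, with Combined-format timestamps; the URLs, dates, and function name are hypothetical.

```python
from datetime import datetime


def first_crawls(hits, prefix="/services/"):
    """Earliest Googlebot hit per URL under `prefix`, from (timestamp, url) pairs."""
    first_seen = {}
    for stamp, url in hits:
        if not url.startswith(prefix):
            continue
        when = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z")
        if url not in first_seen or when < first_seen[url]:
            first_seen[url] = when
    return first_seen


new_urls = ["/services/", "/services/audit", "/services/migrations"]  # hypothetical
hits = [
    ("23/Oct/2025:08:15:00 +0100", "/services/"),
    ("23/Oct/2025:09:40:00 +0100", "/services/audit"),
    ("22/Oct/2025:22:05:00 +0100", "/services/"),
    ("22/Oct/2025:23:00:00 +0100", "/blog/log-files"),
]
seen = first_crawls(hits)
for url in new_urls:
    print(url, seen[url].isoformat() if url in seen else "not crawled yet")
# /services/ 2025-10-22T22:05:00+01:00
# /services/audit 2025-10-23T09:40:00+01:00
# /services/migrations not crawled yet
```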

How Does Mobile-First Indexing Look in the Logs?

We all know Google switched to “Mobile-First Indexing.” This means for the most part, Google is crawling your site using its smartphone user-agent, not its desktop one.

Is this true for your site? Check the logs.

Filter for Googlebot, and then look at the User-Agent report. You should see the vast majority of crawls (like, 90%+) coming from Googlebot Smartphone (its user-agent string describes an Android phone and includes “Mobile”). If you’re still seeing a majority of crawls from Googlebot Desktop, it could be a sign that your site isn’t properly mobile-friendly and Google hasn’t switched it over yet. It’s a quick, unsampled check you can run straight from your own data. For more details on this, check out Google’s official documentation on its crawlers.
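Here’s a rough Python sketch of that split. It relies on one detail of Google’s published user-agent strings: the smartphone crawler’s string includes “Mobile” and the desktop crawler’s doesn’t. Treat it as an approximation to run on verified hits; the sample strings mirror the format in Google’s crawler documentation (including Google’s own W.X.Y.Z placeholder for the Chrome version), and the function name is hypothetical.

```python
from collections import Counter

# Sample strings in the format Google documents (W.X.Y.Z is Google's own
# placeholder for the Chrome version)
SMARTPHONE = ("Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
              "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile "
              "Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")
DESKTOP = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
           "Googlebot/2.1; +http://www.google.com/bot.html) Chrome/W.X.Y.Z Safari/537.36")


def googlebot_device_split(user_agents):
    """Percent of Googlebot hits from the smartphone vs desktop crawler."""
    counts = Counter(
        "smartphone" if "Mobile" in ua else "desktop"  # only the smartphone UA has "Mobile"
        for ua in user_agents
        if "Googlebot" in ua  # skip anything not claiming to be Googlebot
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {device: round(100 * n / total, 1) for device, n in counts.items()}


print(googlebot_device_split([SMARTPHONE, SMARTPHONE, SMARTPHONE, DESKTOP, "Chrome"]))
# {'smartphone': 75.0, 'desktop': 25.0}
```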

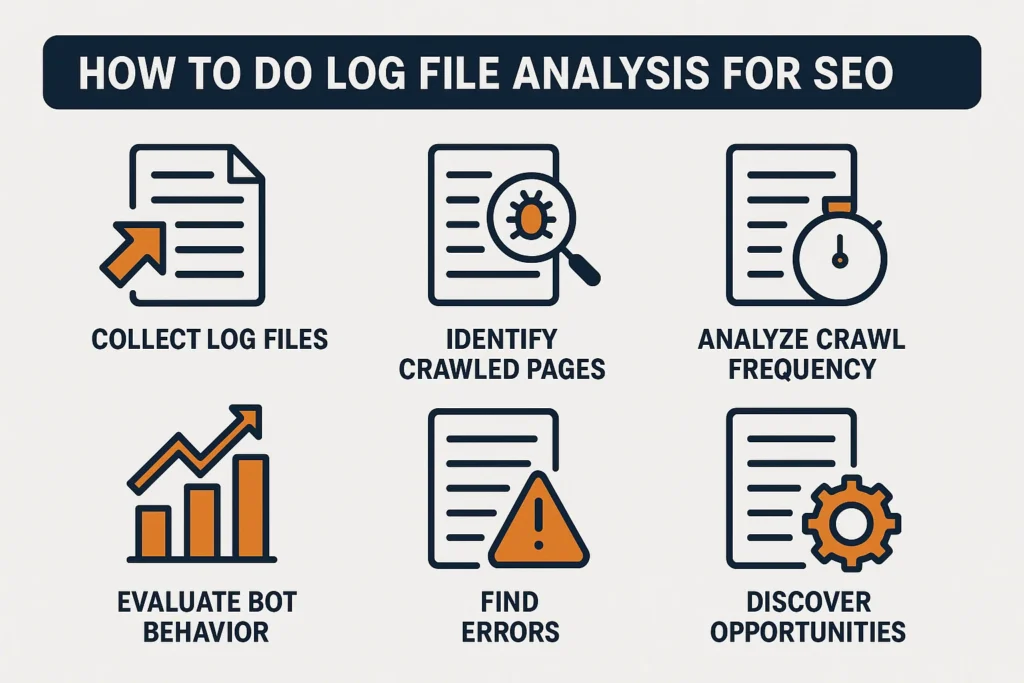

What’s a Simple Process I Can Follow?

Okay, that was a lot. Let’s boil it down to a simple, repeatable workflow that you can do once a month. This is my exact beginner process.

Step 1: Get the Logs

First, get your hands on the data. I recommend getting at least the last 7 days of logs to get a good baseline. 14 or 30 days is even better, but 7 is the minimum. Download the access.log files from your server or hosting provider.

Step 2: Load, Verify, and Filter

Load the log files into your chosen tool (like Screaming Frog Log File Analyser). Let it run the “Verify Bots” (Reverse DNS) process. Once it’s done, filter your entire report to show only “Verified Googlebot.” Now you’re ready.

Step 3: The Emergency Check (Status Codes)

This is your first stop. Look at the “Response Codes” dashboard.

- Any 5xx errors? If yes, this is an emergency. Investigate immediately.

- A high number of 404s? Go to the “404” report. Sort by “Crawl Hits.” Find the top 20 most-crawled broken pages. Find out why they’re being crawled (check your site crawl’s inlinks and any referrers in the logs) and either fix the internal links or 301 redirect them to a live page.

Step 4: The Wasted Budget Check (Redirects)

Go to the “Redirects” (3xx) report.

- Are there any redirect chains? Fix them.

- Are your most-crawled redirects from old internal links? Hunt them down and update them.

Step 5: The “What’s Google Ignoring?” Check (Crawl vs. Logs)

This requires an extra step, but it’s the most valuable.

- Run a fresh crawl of your site with Screaming Frog SEO Spider.

- In the Log File Analyser, import that .seospider crawl file.

- Go to the “Log File Data” tab and look at the “URLs Not in Log File” report.

- This is your list of uncrawled pages. Sort by your most important pages. Are any of your “money” pages on this list? If so, you need to build more internal links to them today.

Step 6: Make One Change.

Don’t try to fix everything. Pick one thing.

- “This week, I am going to fix the top 10 most-crawled 404 errors.”

- “This week, I am going to add 5 new internal links to my top uncrawled ‘services’ page.”

Make your change. Then, in two weeks, pull the logs again and see if your change worked. Did the 404 crawls stop? Did the “services” page finally get crawled? This is how you create a data-driven SEO feedback loop.

You Don’t Need to Be a Wizard. You Just Need to Be Curious.

Log file analysis feels like the final frontier of SEO. It has this reputation as a dark art, practiced only by the most technical wizards.

It’s not.

All it is, at its core, is reading a diary. It’s about trading your “I think…” and “GSC sort of looks like…” for “I know.”

You now know what a log file is. You know how to get it, what tools to use, and the first five things to look for. You are no longer guessing. The truth of your site’s relationship with Google is sitting on your server, waiting for you to look at it.

Go look.

FAQ

What are server logs and why are they important for SEO?

Server logs are detailed records of every request made to your server, including visitors, bots, and errors. They are crucial for SEO because they reveal exactly how search engines crawl your website, helping you identify issues like wasted crawl budget, broken links, and indexing problems.

How can I access my server logs if I am on shared hosting?

On shared hosting, you can typically access your server logs through your cPanel by navigating to the ‘Metrics’ or ‘Logs’ section and downloading the ‘Access Logs’ or ‘Raw Access Logs’ file, which is usually provided as a compressed .gz file.

What do the lines in a server log file mean?

Each line in a server log represents a request made to your server, detailing the visitor’s IP address, timestamp, requested URL, status code, referrer, and user agent, which helps analyze how search engines and users interact with your website.

Why is log file analysis more comprehensive than Google Search Console data?

Log files provide the complete, real-time record of all requests hitting your server, including every crawl, error, and resource, whereas Google Search Console offers sampled, delayed reports, making logs the most reliable source for detailed crawl analysis.

What is crawl budget and how does log file analysis help optimize it?

Crawl budget refers to the limited amount of time and resources Google allocates to crawl your website. Analyzing log files helps you understand how Google spends this budget, identify wasteful crawls, and implement improvements to ensure Google focuses on your most important pages.