There’s a feeling you only get once. Launching your first real website. I can still feel the knot in my stomach from years ago—that potent cocktail of pure pride and abject terror—when I pushed a client’s site live for the first time. You’ve poured yourself into it for weeks, maybe months. Every detail is perfect. The design, the words, the flow. You hit the big red button.

And then… nothing.

A little voice starts whispering from the back of your mind. How is anyone on this giant planet, let alone Google, supposed to find this thing? You hear tech folks talk about bots, spiders, and crawlers. It sounds like an invasion. How do you welcome the good guys and show the bad guys the door? How do you give them the five-star tour but keep them out of the messy back rooms?

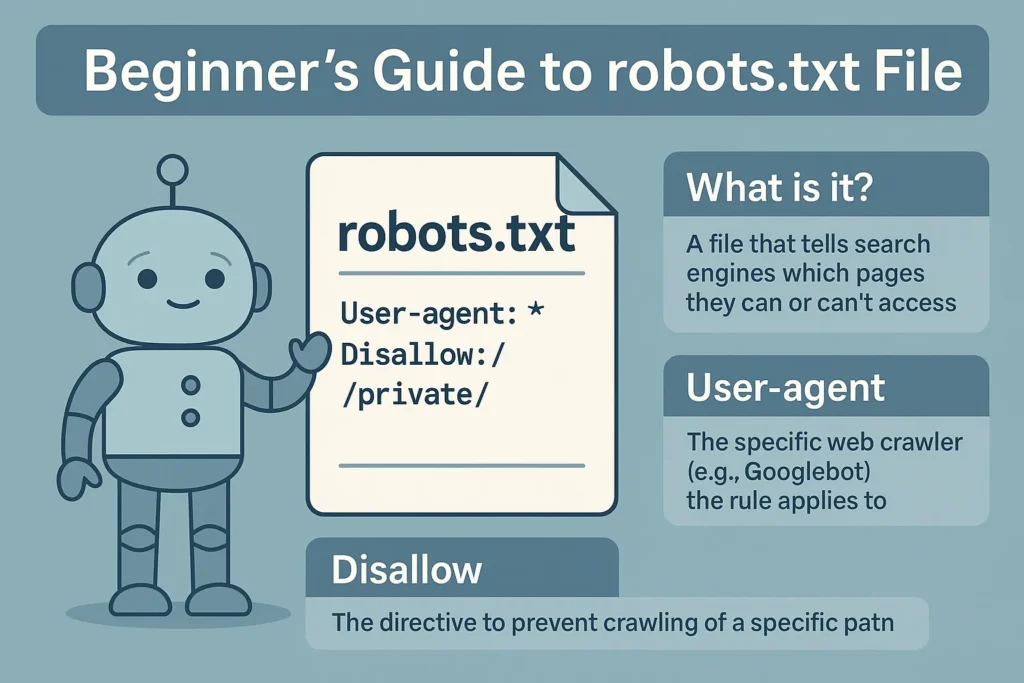

The answer is a deceptively simple, shockingly powerful little text file. This is the ultimate beginner’s guide to the robots.txt file, a true cornerstone of technical SEO.

Think of it as your website’s bouncer, traffic cop, and welcome mat, all rolled into one. Understanding it is the first major step you take from just having a website to truly managing one. Don’t worry, it’s not nearly as scary as it sounds. Let’s pull back the curtain together.


Key Takeaways

- Your robots.txt file is just a simple rulebook for search engine crawlers, telling them where they can and can’t go on your site.

- It must be a plain text file named robots.txt, exactly that, and it has to live in your site’s main root folder (e.g., yourwebsite.com/robots.txt).

- Blocking a page here tells bots not to crawl it, but it won’t stop Google from indexing it if other sites link to it. For that, you need a different tool called a noindex tag.

- Most of the time, you’ll use it to keep bots away from things like duplicate content, private admin areas, or messy internal search results.

- A word of warning: one tiny mistake in this file can have catastrophic consequences, like making your entire website invisible to search engines.

So, What Exactly Is This Robots.txt File I Keep Hearing About?

Let’s start from square one. Imagine your website is a museum you’ve just opened. The public galleries are pristine. But behind the scenes, you’ve got messy offices, cluttered storage rooms, and a chaotic workshop. When a tour guide (that’s our search engine bot) arrives, you don’t want them leading visitors into the workshop. You want to hand them a map. A map that clearly says, “Galleries this way. Staff-only areas are off-limits.”

That map is your robots.txt file.

It’s a plain text file sitting on your website that gives direct instructions to web robots, the crawlers and spiders that search the internet. Well-behaved crawlers check it before they crawl anything else on your domain.

Is It Like a Secret Code for Search Engines?

Not at all. It’s 100% public. You can peek at the robots.txt file for almost any major website by just typing their domain name and adding /robots.txt at the end. Go on, try it. Look at nytimes.com/robots.txt. You’ll see the exact rules they hand out to visiting bots.

The “language” it uses is part of the Robots Exclusion Protocol (REP). That’s just a geeky name for a simple standard (formalized as RFC 9309 in 2022) that well-behaved web crawlers voluntarily follow. It’s built on a few basic commands, mainly User-agent (the name of the bot) and Disallow (the command not to visit a URL). It’s less of a secret code and more of a polite, universal set of instructions. You’re simply saying, “Welcome! Glad you’re here. Just a few house rules before you get started.”

Why Should I Even Care About a Simple Text File?

It’s a fair question. SEO is already a tangled mess of Core Web Vitals, semantic search, and a hundred other buzzwords. Why get hung up on a simple text file? It all comes down to one word: efficiency.

Can’t Google Just Figure My Site Out on Its Own?

Yes and no. Google is incredibly smart, but it doesn’t have infinite resources. Every website is given a “crawl budget”—think of it as the amount of time and energy Google is willing to spend exploring your site on any given visit. If your site has thousands of pages, you want Googlebot spending that precious budget on your absolute best content. Your key service pages. Your brilliant blog posts. Your core products.

You do not want it wasting that time and energy on junk pages like these:

- Internal search results (yoursite.com/?s=some-query)

- Complex, filtered navigation pages from an e-commerce store

- Admin login screens or private dashboards

- Simple “thank you” pages that users see once

By using robots.txt to block these areas, you’re not being sneaky. You’re being a helpful host. You are personally guiding your most important guest, the Google crawler, directly to the good stuff. That focus means more of your crawl budget goes to your important pages, so they tend to get discovered and refreshed sooner, which is the first step toward getting them indexed and ranked.

What Happens If I Just Ignore It Completely?

If you don’t have a robots.txt file, crawlers will assume they have an all-access pass. They’ll try to follow every single link they find. For a tiny, five-page website, this might not be a big deal. But for anything larger, it can create real headaches.

Without guidance, bots can crawl and index pages you never meant for the public to see. A test page a developer forgot to delete. An internal PDF. It also means your crawl budget gets diluted across tons of unimportant URLs. Creating a robots.txt file, even a basic one, is a fundamental best practice. It replaces guesswork with clear instructions about where crawlers should and shouldn’t spend their time.

Where Does This Robots.txt File Actually Live on My Site?

The location is non-negotiable. It has one home. And only one.

Do I Need a Special Folder for It?

Nope. It can’t live in any subfolder at all. Your robots.txt file has to sit in the root directory of your website. Its address must be https://www.yourwebsite.com/robots.txt.

Why so strict? Because it’s the first stop for an automated crawler. They’re programmed to look in that one specific place, and they won’t waste a millisecond looking anywhere else. If it’s not there, they assume no rules exist and proceed to crawl everything. If you buried it in a subfolder like yourwebsite.com/blog/robots.txt, it would be completely ignored. Remember the golden rule: root directory only. Get this wrong, and the entire file is useless.
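Because the location is fixed, you can work out where a crawler will look from any page URL: keep the scheme and host, and swap everything else for /robots.txt. Here’s a minimal Python sketch using only the standard library (the function name robots_txt_url is just an illustration). It also shows why each subdomain, like a hypothetical blog.yourwebsite.com, needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the one robots.txt address crawlers check for this page's host."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (plus any port); replace path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.yourwebsite.com/blog/my-post?ref=home#top"))
# https://www.yourwebsite.com/robots.txt
print(robots_txt_url("https://blog.yourwebsite.com/2024/launch-day/"))
# https://blog.yourwebsite.com/robots.txt
```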

How Do I Write the Rules Inside a Robots.txt File?

Alright, let’s get our hands dirty. How do you actually write these instructions? The syntax is refreshingly simple.

What’s This “User-agent” and “Disallow” Stuff?

A robots.txt file is made of rule groups. Each group identifies the bot it’s for, then lists the rules.

- User-agent: This is just the name of the robot you’re talking to. Google’s main crawler is Googlebot. Bing’s is Bingbot. If you want to talk to all robots, use an asterisk (*) as a wildcard. User-agent: * is how most rule groups start.

- Disallow: This command tells the bot to stay out of a specific URL path. The path is everything after your domain name. So, Disallow: /private/ tells a bot not to crawl any URL that starts with yourwebsite.com/private/.

- Allow: This one is less common but can be a lifesaver. It overrides a Disallow rule for a specific file or subfolder. For example, you could disallow an entire /media/ folder but then use Allow for one specific image inside it that you do want crawled.

- Sitemap: This isn’t a crawling rule, but it’s incredibly helpful. You should use it to point crawlers directly to your XML sitemap. It’s like handing them a perfect map of all the pages you want them to visit.
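To see how these directives combine into groups, here’s a minimal sketch using Python’s built-in urllib.robotparser module. The file and paths are made up for illustration. Two things are worth noticing. First, a crawler follows only the group that names it most specifically, so Googlebot here ignores the * group entirely. Second, Python applies the first rule that matches, while Google picks the most specific (longest) one, so the Allow line is placed first to make both agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with two rule groups.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Allow: /media/logo.png
Disallow: /media/
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

SITE = "https://www.yourwebsite.com"
checks = [
    ("Googlebot", "/drafts/post-1"),   # matched by the Googlebot group
    ("Googlebot", "/private/notes"),   # Googlebot ignores the * group
    ("Bingbot", "/private/notes"),     # Bingbot falls back to the * group
    ("Bingbot", "/media/logo.png"),    # Allow carves out one file
    ("Bingbot", "/media/banner.png"),  # the rest of /media/ stays blocked
]
for agent, path in checks:
    verdict = "allowed" if parser.can_fetch(agent, SITE + path) else "blocked"
    print(f"{agent:<10} {path:<18} {verdict}")
```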

Can You Give Me Some Real-World Examples?

Theory is one thing. Seeing it in action makes it stick.

Example 1: Blocking All Bots from Everything

```
User-agent: *
Disallow: /
```

That single forward slash / means your entire site. This tiny rule is the big red “emergency brake.” It tells all compliant bots to stay out. It’s perfect for a site that’s under construction.

And believe me, you have to be careful. A few years back, I was helping a client with a huge site migration. We had a staging server—a private copy of the new site—where we tested everything. Of course, we used Disallow: / in the robots.txt there. We didn’t want Google finding our half-finished, bug-riddled duplicate site. The launch day came. Everything went smoothly. We pointed the domain to the new server. It all looked perfect.

For three days.

Then the client called, audibly panicked. Their search traffic had fallen off a cliff. My stomach dropped. I raced through every diagnostic I could think of, and then I saw it. In the chaos of the launch, the staging server’s robots.txt file, Disallow: / and all, had gone live with the new site. We had just launched a beautiful new site and told Google, “Don’t you dare look at this.” It was a humbling, sweat-drenched lesson in the power of two little lines of text. We fixed it instantly, but it took nearly a week for traffic to bounce back. Now, “CHECK THE ROBOTS.TXT” is the first and last item on every launch checklist I make.

Example 2: Allowing Everything

```
User-agent: *
Disallow:
```

When you leave the Disallow line blank, you’re signaling that the whole site is open for business. This is the default for many simple sites.
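The difference between shutting the whole site and opening it up is a single character, which is exactly how a launch-day disaster sneaks in. Here’s a minimal pre-launch check sketched in Python, assuming you already have the file’s text in hand; the function name homepage_crawlable is hypothetical. It parses the rules with the standard library’s urllib.robotparser and asks whether Googlebot may fetch the homepage.

```python
from urllib.robotparser import RobotFileParser


def homepage_crawlable(robots_txt: str, site: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may crawl the site's homepage under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, site.rstrip("/") + "/")


BLOCK_ALL = "User-agent: *\nDisallow: /\n"  # Example 1
ALLOW_ALL = "User-agent: *\nDisallow:\n"    # Example 2

site = "https://www.yourwebsite.com"
print("Example 1:", homepage_crawlable(BLOCK_ALL, site))  # Example 1: False
print("Example 2:", homepage_crawlable(ALLOW_ALL, site))  # Example 2: True
```

To run the same check against your live file, RobotFileParser can fetch it for you: create it with your robots.txt URL, call read(), then call can_fetch().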

Example 3: Blocking Specific Folders

```
User-agent: *
Disallow: /admin/
Disallow: /scripts/
Disallow: /wp-content/plugins/
```

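This is a more typical setup. You’re telling all bots to ignore your admin dashboard, a folder of scripts, and your WordPress plugins folder. None of that needs crawling, and blocking it saves your crawl budget. One caution before you copy this: if those folders hold CSS or JavaScript files your pages need to display properly (plugin folders often do), blocking them can stop Google from rendering your pages correctly.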
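Each Disallow value works as a prefix, so one line covers everything beneath that folder, including any stylesheets or scripts stored there. Here’s a quick sketch with Python’s built-in urllib.robotparser that checks a few made-up URLs against Example 3.

```python
from urllib.robotparser import RobotFileParser

EXAMPLE_3 = """\
User-agent: *
Disallow: /admin/
Disallow: /scripts/
Disallow: /wp-content/plugins/
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_3.splitlines())

SITE = "https://www.yourwebsite.com"
# Hypothetical URLs: anything starting with a disallowed prefix is blocked.
for path in [
    "/admin/settings",
    "/blog/my-first-post",
    "/wp-content/plugins/some-plugin/style.css",
    "/wp-content/uploads/photo.jpg",
]:
    verdict = "allowed" if parser.can_fetch("Googlebot", SITE + path) else "blocked"
    print(f"{verdict:<8} {path}")
```

The third URL is the catch: a plugin’s stylesheet gets blocked along with everything else in that folder.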

Example 4: Using Wildcards to Block File Types

```
User-agent: Googlebot
Disallow: /*.pdf$
```

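This one’s a bit more advanced. The asterisk (*) is a wildcard for “any sequence of characters” (including none), and the dollar sign ($) means “the end of the URL.” So, this rule says: “Hey Googlebot, ignore any URL that ends with .pdf.” Google and Bing both understand these wildcards, but not every crawler does.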
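Python’s built-in urllib.robotparser treats rule paths as plain prefixes, so it won’t help with wildcards. Here’s a minimal from-scratch sketch of the two wildcard rules (rule_matches is a made-up name). It only covers whether a pattern matches a URL, not how Google chooses between competing rules.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check a robots.txt path pattern against a URL path (plus query string).

    '*' matches any sequence of characters, including none; a trailing '$'
    means the URL must end there. Otherwise the pattern only needs to match
    the start of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then rejoin the pieces with '.*' for every '*'.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start of the string, giving prefix matching.
    return re.match(regex, path) is not None


for path in ["/files/report.pdf", "/files/report.pdf?download=1", "/report.pdf.html"]:
    print(path, rule_matches("/*.pdf$", path))
```

Note the second URL: because $ means the very end of the URL, a PDF link with a query string tacked on slips past this rule.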

Example 5: Pointing to Your Sitemap

```
User-agent: *
Disallow: /admin/

Sitemap: https://www.yourwebsite.com/sitemap.xml
Sitemap: https://www.yourwebsite.com/news_sitemap.xml
```

Here, we’ve combined a crawling rule with a Sitemap directive. You can list multiple sitemaps, which is handy for big sites.
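Sitemap lines sit outside the rule groups, so a parser collects them no matter where they appear in the file. Python’s urllib.robotparser exposes them through site_maps() (Python 3.8 and later); here it is reading Example 5.

```python
from urllib.robotparser import RobotFileParser

EXAMPLE_5 = """\
User-agent: *
Disallow: /admin/
Sitemap: https://www.yourwebsite.com/sitemap.xml
Sitemap: https://www.yourwebsite.com/news_sitemap.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_5.splitlines())

# site_maps() returns the listed URLs in file order, or None if there are none.
for sitemap_url in parser.site_maps() or []:
    print(sitemap_url)
```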

What Are the Most Common Mistakes People Make With Robots.txt?

As my horror story shows, this simple file can cause major disasters. It’s a powerful tool; you need to know the pitfalls.

Can I Accidentally Make My Whole Site Invisible?

You absolutely can. It’s the number one mistake, and it’s painfully easy to make. Leaving a Disallow: / in place after launch is a catastrophic, self-inflicted wound. Always, always double-check your robots.txt after any major site change. Another sneaky but fatal error is a typo. File paths are case-sensitive. If your folder is /Images/ but you write Disallow: /images/, the rule will do nothing. Precision is everything.
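You can see the case-sensitivity trap for yourself with Python’s built-in urllib.robotparser. Bot names are matched case-insensitively, but paths are compared exactly, so this rule misses the capitalized folder. The URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /images/"])

SITE = "https://www.yourwebsite.com"
print(parser.can_fetch("Googlebot", SITE + "/images/photo.jpg"))  # False: blocked
print(parser.can_fetch("Googlebot", SITE + "/Images/photo.jpg"))  # True: rule doesn't match
```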

Is Blocking a Page Here the Same as Making It Secret?

This is the biggest misconception about robots.txt. Let me be crystal clear: Disallowing a URL in robots.txt does not guarantee it won’t be indexed.

It’s a crawl directive, not an index directive.

So how can a “blocked” page still show up in Google? Simple. If another website links to your blocked page, Google will see the link and know that URL exists. Even though Googlebot will obey your robots.txt and not crawl the page, Google might still put the URL in the search results. When this happens, the result looks weird. It will have the URL, but the description under it will say something like, “A description for this result is not available because of this site’s robots.txt.”

I saw this happen with a client who ran a membership site. They had a page with special pricing they “hid” with Disallow: /special-pricing/. They thought it was invisible. But a partner organization linked directly to that page in a public newsletter. A competitor found it. Suddenly, my client was fielding calls from angry customers demanding the special price. They were floored.

The lesson? robots.txt is a polite request. It’s a “Please Keep Out” sign on an unlocked door. If you truly need to keep a page out of search results, you must use a noindex meta tag in the page’s HTML, and you must leave that page crawlable: if robots.txt blocks it, Googlebot never fetches the page and never sees the tag. For truly sensitive content, you need to password-protect it on the server.
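A noindex tag only works if Googlebot is allowed to fetch the page and read it; if robots.txt blocks the URL, the tag is never seen. Here’s a minimal audit sketch in Python for that trap, using only the standard library. The class and function names are hypothetical, and it only looks for the generic robots meta tag, not crawler-specific tags or the X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class NoindexFinder(HTMLParser):
    """Record whether the HTML has <meta name="robots"> with a noindex directive."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        directives = [d.strip().lower() for d in attributes.get("content", "").split(",")]
        if "noindex" in directives:
            self.noindex = True


def noindex_status(robots_txt: str, url: str, html: str) -> str:
    """Explain whether Googlebot could actually see a page's noindex tag."""
    finder = NoindexFinder()
    finder.feed(html)
    finder.close()
    if not finder.noindex:
        return "no noindex tag"
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch("Googlebot", url):
        return "noindex never seen: robots.txt blocks this URL"
    return "noindex can be seen"


PAGE = '<html><head><meta name="robots" content="noindex"></head></html>'
URL = "https://www.yourwebsite.com/special-pricing/"
print(noindex_status("User-agent: *\nDisallow: /special-pricing/", URL, PAGE))
print(noindex_status("User-agent: *\nDisallow:", URL, PAGE))
```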

I’m Using a CMS like WordPress. Isn’t This Handled for Me?

Platforms like WordPress or Shopify certainly make life easier. They typically generate a default robots.txt file for you. SEO plugins like Yoast even give you a friendly interface for editing this file.

But that convenience can be a trap. It’s easy to accidentally check a box in your site settings that adds a Disallow rule you never intended. A common problem is blocking crawlers from the CSS or JavaScript files that make your site look and work right. If Google can’t render your page properly, it can’t understand it, and your rankings can plummet. Even with a CMS, you need to know what’s in your robots.txt and check it.

How Can I Check If My Robots.txt File Is Working Correctly?

So you’ve written your rules. Now what? You can’t just cross your fingers and hope for the best. Luckily, Google gives you a free and simple way to check your work.

Is There a Tool I Can Use to Test My Rules?

Yes. For years, the go-to was the Robots.txt Tester in Google Search Console, which let you test URLs against your rules. Google retired it at the end of 2023 and replaced it with the robots.txt report, which you should get to know well. It shows the robots.txt files Google found for your site, when it last checked them, and any warnings or errors it ran into.

Here’s how to use it:

- Log into your Google Search Console account.

- Open Settings and find the robots.txt report. (Search Console’s layout changes from time to time, so if it isn’t there, search Google’s help pages for “robots.txt report.”)

- Check that the file Google fetched is the one you meant to publish, and fix anything flagged as a warning or error.

- To check a single page, paste its full URL into the URL Inspection tool at the top of Search Console. The result tells you whether crawling is allowed or blocked by robots.txt.

What the report can’t do is test draft rules before they go live, which was the old tester’s best trick. So before you ever change your live file, run your new rules past a robots.txt parser on your own machine (Google has open-sourced the parser its own crawlers use), and read the official Google Search Central documentation on how each rule is interpreted. It’s a risk-free safety net. Use it every time.
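For a quick offline check of draft rules, here’s a minimal sketch in Python using the standard library’s urllib.robotparser. You list the URLs you care about and whether each should be crawlable, and the function returns any that the draft gets wrong. check_draft and the sample URLs are hypothetical. Keep in mind this parser is simpler than Google’s (no wildcards, and the first matching rule wins), so treat it as a first pass rather than the final word. The sample draft blocks all of /wp-content/, which also hides the theme’s stylesheet.

```python
from urllib.robotparser import RobotFileParser


def check_draft(robots_txt, expectations, agent="Googlebot"):
    """Return (url, expected, actual) for every URL the draft rules get wrong."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url, should_be_allowed in expectations.items():
        allowed = parser.can_fetch(agent, url)
        if allowed != should_be_allowed:
            problems.append((url, should_be_allowed, allowed))
    return problems


DRAFT = """\
User-agent: *
Disallow: /admin/
Disallow: /wp-content/
"""

# True = must stay crawlable, False = should be blocked (hypothetical URLs).
EXPECTATIONS = {
    "https://www.yourwebsite.com/": True,
    "https://www.yourwebsite.com/services/": True,
    "https://www.yourwebsite.com/wp-content/themes/mytheme/style.css": True,
    "https://www.yourwebsite.com/admin/login": False,
}

for url, expected, actual in check_draft(DRAFT, EXPECTATIONS):
    want = "allowed" if expected else "blocked"
    got = "allowed" if actual else "blocked"
    print(f"{url}: expected {want}, got {got}")
```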

Should I Be Blocking AI Crawlers Like GPTBot?

This is a very new question, and it’s become a major debate. The explosion of generative AI has unleashed a whole new breed of web crawlers.

What’s the Deal with All These New AI Bots?

Companies like OpenAI (makers of ChatGPT), Google, and others are constantly hoovering up web data to train their Large Language Models (LLMs). To do this, they use their own crawlers. The main ones you might see are:

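- GPTBot (from OpenAI)

- Google-Extended (the token Google checks before using your content for its AI models; the crawling itself is done by Google’s regular crawlers)

- CCBot (from Common Crawl, a non-profit)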

These bots aren’t crawling your content to rank it in search. They’re consuming it to use as training data for artificial intelligence.

What Are the Pros and Cons of Blocking Them?

This decision is entirely up to you. Smart people disagree.

The Argument for Blocking: A lot of creators are blocking these bots to protect their intellectual property. They’ve worked hard to create original content and don’t want it fed into an AI model that could then be used to generate competing content, often without credit. Others block them simply to reduce server load.

The Argument for Allowing: On the flip side, some believe being part of these AI datasets could be a good thing. Future AI tools might cite their sources, and if you’ve blocked them, you may be left out. Allowing them keeps your knowledge in the pool these powerful new technologies learn from.
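Whichever way you lean, it helps to know that a rule group only applies to the user-agents it names, so blocking AI crawlers shouldn’t change how search crawlers treat your site. Google also states that blocking Google-Extended doesn’t affect your site’s inclusion or ranking in Google Search. Here’s a minimal sketch with Python’s built-in urllib.robotparser that checks a made-up URL against two AI-blocking groups.

```python
from urllib.robotparser import RobotFileParser

AI_RULES = """\
# Block OpenAI's GPTBot
User-agent: GPTBot
Disallow: /

# Opt out of Google's AI models
User-agent: Google-Extended
Disallow: /
"""

parser = RobotFileParser()
parser.parse(AI_RULES.splitlines())

URL = "https://www.yourwebsite.com/blog/my-first-post"
for agent in ["GPTBot", "Google-Extended", "Googlebot", "Bingbot"]:
    verdict = "allowed" if parser.can_fetch(agent, URL) else "blocked"
    print(f"{agent:<16} {verdict}")
# Search crawlers aren't named in any group, so they stay allowed.
```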

If you do decide to block them, it’s easy. Just add a new rule group to your robots.txt file:

```
# Block OpenAI's GPTBot
User-agent: GPTBot
Disallow: /

# Opt out of Google's AI models (Google-Extended)
User-agent: Google-Extended
Disallow: /
```

FAQ

What is the purpose of a robots.txt file on a website?

The robots.txt file serves as a set of instructions for search engine crawlers, indicating which pages or sections of a website they are allowed or restricted from visiting, thus helping manage how the site is crawled.

How do you write rules inside a robots.txt file?

Rules in a robots.txt file are written using simple syntax: ‘User-agent’ to specify the bot, ‘Disallow’ to block specific pages or directories, and optional directives like ‘Allow’ to carve out exceptions and ‘Sitemap’ to point crawlers to your sitemaps.

What are common mistakes made with robots.txt files?

Common mistakes include accidentally blocking the entire site with ‘Disallow: /’, which can make the site invisible to search engines, and typos (including wrong capitalization) in file paths that stop rules from working, so pages you meant to block get crawled anyway.

Can blocking a page in robots.txt prevent it from appearing in search results?

Blocking a page in robots.txt only prevents it from being crawled, not necessarily from appearing in search results, especially if other sites link to it. To truly keep a page out of search results, use a noindex meta tag (and leave the page crawlable so search engines can see the tag) or password protection.