It’s a feeling every website owner knows. That little jolt in your stomach when you open Google Search Console first thing in the morning. You’re expecting to see your traffic graph climbing steadily, but instead, you’re met with a sudden, cliff-like drop. Or maybe it’s a list of angry red error messages that seems to be growing by the day.

Your heart sinks. It’s a moment of dread that sends you scrambling for answers, which is probably what brought you here. I’ve got good news for you: you’re in the right place. Those intimidating messages are just crawl errors, and as scary as they look, they’re almost always fixable. This guide is going to be your personal roadmap for figuring out how to fix crawl errors in Search Console, for good.

We’re going to tackle this together, one step at a time. No confusing jargon, no technical nonsense. Just a clear, straightforward plan to turn those red warnings into green signs of success.

Key Takeaways

- Don’t Panic, It’s Just a Blocked Path: A crawl error is simply Google’s web-crawler, Googlebot, telling you it tried to visit a page but couldn’t get in. It’s a super common problem that just needs a little attention.

- The “Pages” Report is Your New Basecamp: Forget the old “Crawl Errors” report; it’s long gone. All your work will now happen in the “Pages” report (what used to be the “Coverage” report) inside Search Console.

- Know Your Enemy: Each error is a different kind of puzzle. A Server Error (5xx) is totally different from a Not Found (404) or a Redirect Error, and each needs its own specific solution.

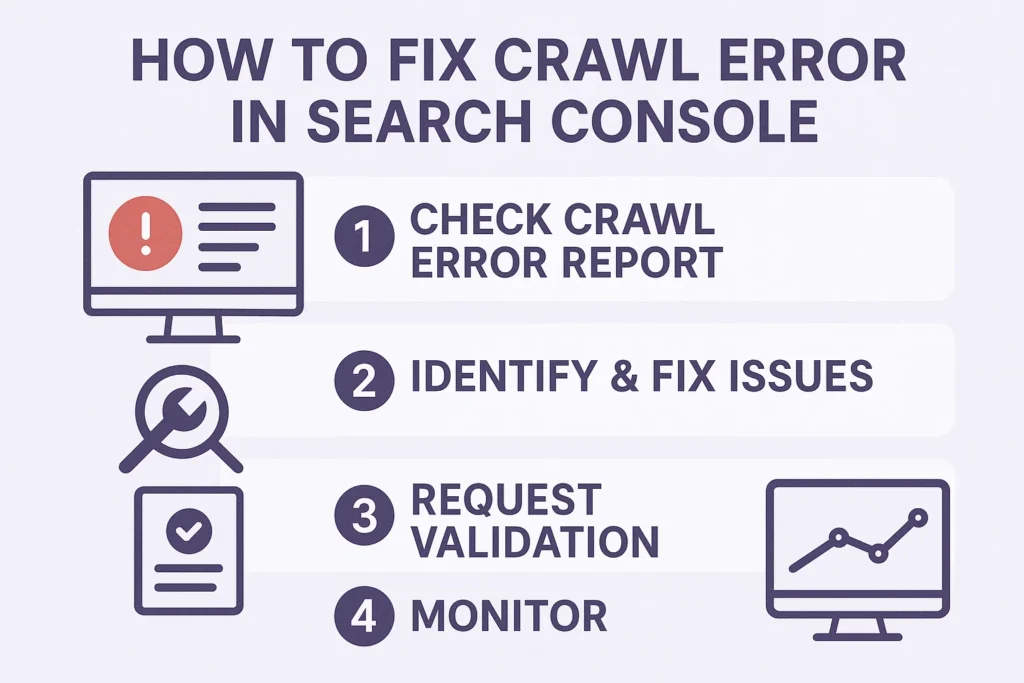

- The Fix is a Four-Step Dance: It’s always the same rhythm: Identify the bad URLs, figure out what’s actually wrong, apply the right fix, and then hit the “Validate Fix” button to let Google know you’re done.

- An Ounce of Prevention is Worth a Pound of Cure: The real goal is to stop errors before they even start. Making regular site checks and having a clear process for any website changes is your best strategy.

So, What Exactly Is a Crawl Error and Why Should I Care?

Before we start applying fixes, let’s get crystal clear on what we’re dealing with. Think of Google as running a vast fleet of tiny robot explorers, known collectively as “Googlebot.” Their only job is to explore the web, day and night, reading pages to figure out what they’re about. This journey is called “crawling.” These bots follow links from one page to the next, constantly drawing and redrawing a massive map of the online world.

A crawl error is simply one of those robot explorers hitting a brick wall.

It tried to follow a path to a URL on your site, but something went wrong. Maybe the path was broken. Maybe the page it was looking for was gone. Or maybe the server, the very foundation of your site, didn’t even bother to answer the door.

Why does this matter so much? Because if Googlebot can’t get to your pages, it can’t read their content. If it can’t read the content, it has no idea what you’re talking about. And if it has no idea what you’re talking about, there’s zero chance it’s going to show your page to someone in the search results.

It really is that simple. Crawl errors are a direct roadblock between your valuable content and the people trying to find it. They can cause a cascade of problems:

- Indexing Nightmares: Your most crucial pages—the ones that make you money or build your brand—might never get added to Google’s library, making them totally invisible to searchers.

- A Leaky “Crawl Budget” Bucket: Google only has so much time and energy to spend exploring your site. This is your “crawl budget.” If its bots are constantly running into errors, they’re wasting that precious budget on dead ends instead of discovering your latest, greatest content.

- Frustrated Visitors: More often than not, whatever is blocking Googlebot is also creating a terrible experience for actual human beings, causing them to click the back button and likely never return.

Fixing these errors isn’t just about appeasing a search engine. It’s about making sure all the hard work you’ve poured into your website actually sees the light of day.

Before We Dive In, Where Did the Old Crawl Errors Report Go?

If you’ve been in the SEO game for a bit, you might be searching around for the old, familiar “Crawl Errors” report. I can save you the headache: it’s gone.

A while back, Google gave Search Console a major facelift and sent that old report into retirement. It caused a brief moment of panic in the community, but honestly, the change was a huge improvement. Instead of cordoning errors off in their own little section, Google integrated that data directly into what they first called the “Coverage” report. Now, it’s been streamlined even further and is simply named the “Pages” report.

This new way of doing things is infinitely more useful. It doesn’t just show you what’s broken; it gives you a complete health summary for every single URL Google knows about on your site. You can see what’s indexed, what’s not, and exactly why. It gives you the context you need to fix things intelligently.

How Can I Find These Pesky Crawl Errors in the “Pages” Report?

Alright, let’s get our hands dirty. Finding the list of errors is the first and easiest part of the job.

- Log into your Google Search Console account.

- On the left-hand navigation menu, look for the “Indexing” section and click on “Pages.”

- You’ll see a graph up top. Ignore that for now and scroll down just a little bit.

- You’ll come to a summary table titled “Why pages aren’t indexed.”

This is it. This is your command center. This table lists every reason Google has for not including a page from your site in its index. Some of these reasons are perfectly fine (like pages you’ve intentionally blocked), but this is also where the real, problem-causing errors are hiding. They’ll be clearly labeled with descriptions like “Server error (5xx)” or “Not found (404).” When you click on any of these reasons, you’ll get a full, detailed report showing every single URL suffering from that specific problem.

What Are the Most Common Types of Errors and What Do They Mean?

Once you click into a report, you’ll be face-to-face with the errors. To fix them, you first have to understand them. Let’s break down the usual suspects.

Is a “Server Error (5xx)” My Fault or My Host’s?

Seeing a “Server Error (5xx)” is unsettling because it sounds like the whole house is on fire. And it’s not far off. A 5xx error means Googlebot sent a perfectly reasonable request to your website’s server, but the server itself failed. It’s not that the page was missing; it’s that the entire server was too sick or overwhelmed to even handle the request.

The most common flavors are the 500 Internal Server Error and the 503 Service Unavailable. This isn’t a Google problem; it’s a you problem. The issue is almost always with your server, your hosting, or your site’s underlying code. Common causes include:

- Your server is getting hammered with more traffic than it can handle.

- You’re on a cheap hosting plan that just can’t keep up.

- A developer pushed a bad code update, or you installed a plugin that’s causing chaos.

- Your hosting company is performing maintenance.

I learned this lesson in the most stressful way possible. Years ago, I was managing a huge e-commerce launch. The marketing campaign was a massive success, and traffic was pouring in. But the client was on a bargain-bin hosting plan. The server buckled under the pressure and started spitting out 503 errors. The site was down. Right at our biggest moment. It was an expensive, painful masterclass on the importance of quality hosting. If you see 5xx errors, your very first action is to contact your developer or your hosting provider.

What’s the Deal with “Redirect Error”?

Redirects are your friend. They let you send users and search engines from an old URL to a new one, which is a vital part of managing a website. A redirect error, however, is when this friendly guidance system turns into a confusing mess.

These errors typically pop up in a few scenarios:

- The Never-Ending Detour: Googlebot will only follow a limited number of redirects in a sequence (Google’s documentation puts the limit at 10 hops). If you have a chain where Page A points to B, B to C, C to D, and so on, Googlebot will eventually just give up and go home.

- The Infinite Loop: This is when you accidentally create a circle. Page A redirects to Page B, but something on your site is redirecting Page B right back to Page A. Googlebot would go around in circles forever, so it gives up and flags an error.

- A Road to Nowhere: The redirect is set up, but the destination URL at the end of the chain is broken or doesn’t exist.

Imagine giving a friend directions that just lead them around the same block over and over. They wouldn’t stick around for long. Neither will Googlebot.
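
If you’d like to see how those three scenarios differ in practice, here is a minimal Python sketch. It does not touch the network: the `redirects` dictionary and `live_pages` set are hypothetical stand-ins for your own redirect rules and working URLs, and the `max_hops` default is an assumption you can change to whatever limit you want to enforce.

```python
def trace_redirects(start, redirects, live_pages, max_hops=10):
    """Follow a redirect chain and report how it ends.

    redirects  -- dict mapping a URL to the URL it redirects to (hypothetical data)
    live_pages -- set of URLs that serve real content (hypothetical data)
    max_hops   -- assumed limit on hops before a crawler gives up
    Returns (verdict, path_taken).
    """
    path = [start]
    current = start
    hops = 0
    while current in redirects:
        if hops == max_hops:
            return "too many hops", path
        current = redirects[current]
        hops += 1
        if current in path:
            # We have been here before, so the chain is a circle.
            return "loop", path + [current]
        path.append(current)
    # The chain ended; check whether it ended somewhere useful.
    verdict = "ok" if current in live_pages else "broken destination"
    return verdict, path


print(trace_redirects("/a", {"/a": "/b", "/b": "/a"}, set()))
# -> ('loop', ['/a', '/b', '/a'])
```

The path it returns is the useful part: it shows you exactly which hop sends the crawler in circles or off a cliff.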

Why Does It Say “Submitted URL Blocked by robots.txt”?

This is a classic case of sending mixed messages. Your robots.txt is a simple text file on your server that acts as a bouncer, telling search engine crawlers which parts of your site they aren’t allowed to enter.

This specific error means you’ve done two things that directly contradict each other:

- You put a URL in your XML sitemap. The sitemap is a list you give to Google that says, “Here are my most important pages! Please crawl them!”

- You then created a rule in your robots.txt file that says, “Hey Google, you are not allowed to crawl this exact same page.”

Google gets confused. You’re pointing at the door and yelling “Come in!” while also standing in the doorway with your arms crossed saying “You can’t enter.” You have to choose one.
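
You can spot this contradiction yourself before Google does. The sketch below uses Python’s built-in `urllib.robotparser` to check a list of sitemap URLs against a robots.txt file. The robots.txt text and the example.com URLs are made up for illustration, and the standard-library parser may not match Googlebot’s behavior in every edge case, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt contents and sitemap URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""
SITEMAP_URLS = [
    "https://example.com/",
    "https://example.com/private/pricing",
]

print(blocked_urls(ROBOTS_TXT, SITEMAP_URLS))
# -> ['https://example.com/private/pricing']
```

Any URL this prints is one where your sitemap and your robots.txt disagree.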

What Does “Submitted URL Marked ‘noindex’” Actually Mean?

This is another mixed-signal error, almost identical to the robots.txt issue. Besides using robots.txt to block crawling, you can also use a special meta tag in a page’s HTML to block indexing. That tag looks like this: <meta name="robots" content="noindex">.

This tag is a very clear instruction to Google that says, “You are free to crawl and look at this page, but you are forbidden from ever showing it in your search results.”

The error happens when you tell Google to index the page (by putting it in your sitemap) while the page itself is telling Google not to index it. It’s another contradiction that needs to be resolved.
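
If you want to check a page for that tag without squinting at its source, a few lines of Python will do it. This is a rough sketch using the standard library’s `html.parser`. It only looks at meta tags in the HTML you hand it, so it won’t notice a noindex sent another way (for example, in an HTTP header).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # content can hold several comma-separated directives.
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex"></head>'))
# -> True
```

Run it over the HTML of each URL in your sitemap and you have a quick list of pages sending mixed signals.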

Help! What is a “Soft 404” and How is it Different From a Real One?

This is probably the most confusing error on the list, but it’s critical to understand. A “real” 404 error (which we’ll get to next) is the correct and proper way to handle a missing page. A request comes in for a page that doesn’t exist, and your server correctly responds with the 404 “Not Found” status code.

A “Soft 404” is a lie. It’s when a request comes in for a page that doesn’t exist, but your server pretends that everything is fine. It returns a 200 “OK” status code, even though the page content might say something like “Sorry, we couldn’t find what you were looking for.”

This is bad because it confuses Google. Google thinks it has found a real page, so it wastes time crawling and indexing it. This can lead to Google indexing thousands of thin, useless, “not really here” pages, which wastes your crawl budget and can make your whole site look low-quality.

I once worked with a huge used car website that had this problem at a massive scale. They had pages for every car they had ever sold. Instead of letting these pages 404, they just showed a “This car is sold” message but served a 200 status code. They had hundreds of thousands of Soft 404s. Google was spending all its time crawling these sold-car pages instead of their new inventory. Fixing that one issue had a dramatic, overnight impact on their business.
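
A soft 404 boils down to a mismatch between the status code and what the page actually says, and you can approximate that check yourself. The sketch below is a crude heuristic, not how Google detects soft 404s. The phrase list is an assumption; swap in whatever wording your own “missing page” template uses.

```python
# Hypothetical phrases that suggest the page is really an error page.
NOT_FOUND_PHRASES = (
    "not found",
    "couldn't find",
    "couldn’t find",
    "no longer available",
    "this car is sold",
)


def looks_like_soft_404(status_code, body_text):
    """Flag pages that say 'missing' in the body but answer with 200 OK."""
    if status_code != 200:
        return False  # A real 404 or 410 is honest, so it is not a soft 404.
    text = body_text.lower()
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)


print(looks_like_soft_404(200, "Sorry, we couldn't find what you were looking for."))
# -> True
```

It will throw the odd false positive (a blog post about 404 pages, say), so use it to build a list worth reviewing rather than a list to act on blindly.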

And What About the Classic “Not Found (404)” Error?

Finally, the error that everyone has heard of. The 404. This one is simple. It means a user or Googlebot tried to go to a URL, and there was nothing there. The server looked, but the page was gone.

This happens all the time for perfectly normal reasons:

- You deleted an old blog post.

- You changed a URL and forgot to redirect the old one.

- Another website linked to you but made a typo in the URL.

- You have a broken link somewhere on your own website.

Here is the single most important thing to know about 404s: it is 100% normal and okay to have 404 errors. The internet is a messy place. You don’t need to have a panic attack and try to fix every single one. The key is to prioritize. Is your most important, highest-converting service page showing a 404? That’s a five-alarm fire. Is it some bizarre, garbled URL you’ve never seen before that got one hit? Let it go.

Okay, I’ve Found My Errors. How Do I Actually Fix Them? (The Step-by-Step Guide)

You’ve done your homework. You know what the errors are. Now it’s time to actually fix them. The process is the same no matter what you’re tackling.

Step 1: Analyze the URL List

Click on an error type in Search Console to see the list of affected URLs. Don’t just glance at it; really dig in. For each URL, ask yourself:

- What is this page supposed to be? Is it a critical service page, a blog post, or some weird, old archive page from 2008?

- Should this URL even work? Is it perhaps a leftover from an old version of your website that you don’t need anymore?

- Do I see a pattern? Are all the 404 errors in your /blog/ section? Are all the server errors happening on product pages? A pattern suggests a single, systemic problem rather than a bunch of individual ones.

This analysis is triage. It helps you figure out what’s a paper cut and what’s a gaping wound.
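
Pattern-spotting gets hard once the list runs to hundreds of URLs. If you export the list from Search Console, a short script can count errors by site section for you. Here is one way it might look, assuming your sections correspond to the first folder in the URL path. The example.com URLs are placeholders.

```python
from collections import Counter
from urllib.parse import urlparse


def count_by_section(urls):
    """Count URLs by their first path folder, most common first.

    Pages that sit directly under the domain are grouped under "/".
    """
    counts = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        section = f"/{segments[0]}/" if len(segments) > 1 else "/"
        counts[section] += 1
    return counts.most_common()


# Hypothetical export of URLs with 404 errors.
error_urls = [
    "https://example.com/blog/old-post",
    "https://example.com/blog/another-old-post",
    "https://example.com/products/red-shoes",
    "https://example.com/about",
]
for section, total in count_by_section(error_urls):
    print(section, total)
```

If one section dominates the output, start your investigation there.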

Step 2: Investigate the Root Cause

For any single URL, Search Console gives you a powerful diagnostic tool. Hover over a URL in the error list and click the little magnifying glass icon. This will open the URL Inspection Tool.

This tool is your new best friend. It shows you the world from Googlebot’s perspective. It will tell you:

- The referring page (where Googlebot found the link to the broken page).

- The last time Google tried to crawl it.

- If the page is listed in your sitemap.

- A screenshot of what Google sees when it tries to load the page (available when you run a live test).

That “referring page” information is pure gold. It often tells you exactly where the problem started.

Step 3: Implement the Fix (Tailored to Each Error)

Now you put your detective work into action. Your fix will depend on the specific error you’re tackling.

How to Fix Server Errors (5xx):

- Contact Your Host Immediately: This is your first step. Give them the list of URLs and tell them what’s happening. They can look at server logs and often find the problem quickly.

- Retrace Your Steps: Did this start happening right after you installed a new plugin or updated your website’s theme? Try temporarily deactivating it to see if the error goes away.

- Check Your Vitals: Look at the CPU and memory usage in your hosting dashboard. If they are constantly hitting 100%, you’ve outgrown your plan. It’s time for an upgrade.
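
If you have access to your server’s access logs, you can do some of this digging yourself while you wait for your host to reply. The sketch below counts 5xx responses per URL path. It assumes the widely used common or combined log format (request in quotes, followed by the status code); your server’s format may differ, and the sample lines are invented.

```python
import re
from collections import Counter

# Matches the quoted request and the status code that follows it, e.g.
# "GET /some/path HTTP/1.1" 503
LOG_LINE = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3})\b')


def server_errors_by_path(log_lines):
    """Count 5xx responses per requested path."""
    counts = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and match.group("status").startswith("5"):
            counts[match.group("path")] += 1
    return counts


# Hypothetical log excerpt.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products/red-shoes HTTP/1.1" 503 512',
    '66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /products/red-shoes HTTP/1.1" 500 512',
    '66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /blog/hello HTTP/1.1" 200 8452',
]
print(server_errors_by_path(sample_log).most_common())
# -> [('/products/red-shoes', 2)]
```

A handful of paths with most of the errors points to a code problem on those pages. Errors spread evenly across the whole site point to the server itself.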

How to Fix Redirect Errors:

- Map the Journey: Use a free online redirect checker tool. Just paste in the URL, and it will show you every single “hop” in the redirect chain.

- Find the Loop or Break: The tool will make it obvious where the chain is looping back on itself or where it’s just too long.

- Fix the Rule: Go into your server’s .htaccess file or your website’s redirect management plugin and correct the faulty rule. Your goal should always be a single redirect from the old URL to the new one.
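
To make “a single redirect from the old URL to the new one” concrete, here is a small Python sketch that takes a set of chained redirect rules and works out where each old URL should point directly. The `redirects` dictionary is a hypothetical stand-in for whatever is in your redirect plugin or server config. The script only computes the cleaned-up mapping; applying it is still up to you.

```python
def flatten_redirects(redirects):
    """Return a mapping where every source points straight at its final destination.

    Raises ValueError if the rules contain a loop, since a loop has no final destination.
    """
    flat = {}
    for source, target in redirects.items():
        seen = {source}
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


# Hypothetical chain: /a -> /b -> /c -> /d
chained = {"/a": "/b", "/b": "/c", "/c": "/d"}
print(flatten_redirects(chained))
# -> {'/a': '/d', '/b': '/d', '/c': '/d'}
```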

How to Fix robots.txt Blocks:

- Make a Decision: Simple question: do you want Google to crawl this page or not?

- If you want it crawled and indexed: Open your robots.txt file (at yourdomain.com/robots.txt). Find the Disallow: rule that’s blocking the URL and delete that line, or narrow it if it also covers pages you do want blocked.

- If you don’t want it crawled: The robots.txt rule is correct, but the URL shouldn’t be in your sitemap. Remove it from your sitemap file.

How to Fix noindex Errors:

- Again, Make a Decision: To index, or not to index? That is the question.

- If you want it indexed: You have to hunt down and remove the noindex tag. View the page’s HTML source and look for <meta name="robots" content="noindex">. The setting to remove this is usually a checkbox in your SEO plugin (like Yoast or Rank Math) on that specific page’s editing screen.

- If you don’t want it indexed: The page is correctly marked noindex, so it shouldn’t be in your sitemap. Remove it.

How to Fix Soft 404s:

- If the content is truly gone forever: The page needs to return a real 404 (Not Found) or 410 (Gone) status code. This tells Google to stop bothering with the page and remove it from the index.

- If the content has a logical replacement: Use a permanent 301 redirect. For instance, if a product has been permanently discontinued, redirect its page to the closest replacement product or its main category page. This often requires a developer to fix properly.

How to Fix Not Found (404) Errors:

- Triage that list. Which pages are most important? Look for pages that have links from other websites or pages that used to get a lot of traffic. Fix those first.

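- For your high-priority 404s:

  - If the page just moved, set up a 301 redirect to its new URL.

  - If you deleted the page on purpose, redirect the old URL to the next most relevant page you have. This could be its parent category or a related blog post. Treat your homepage as a true last resort: Google often treats a redirect to an unrelated page as a soft 404, so if nothing relevant exists, letting the URL return a 404 is fine.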

- For low-priority 404s: Seriously, just ignore them. Don’t waste your time.
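
The triage itself can be as simple as a sort. The sketch below splits a list of broken URLs into “fix first” and “ignore” piles using the two signals mentioned above: links from other sites and past traffic. The thresholds and the sample numbers are arbitrary assumptions; you would pull real figures from your own backlink and analytics tools.

```python
from dataclasses import dataclass


@dataclass
class BrokenUrl:
    url: str
    backlinks: int            # links from other websites
    past_monthly_visits: int  # traffic the page used to get


def triage(broken, min_backlinks=1, min_visits=100):
    """Split broken URLs into (high_priority, low_priority).

    High priority means at least min_backlinks external links or
    at least min_visits past monthly visits (both thresholds are assumptions).
    """
    high = [b for b in broken
            if b.backlinks >= min_backlinks or b.past_monthly_visits >= min_visits]
    high.sort(key=lambda b: (b.backlinks, b.past_monthly_visits), reverse=True)
    low = [b for b in broken if b not in high]
    return high, low


# Hypothetical data.
broken = [
    BrokenUrl("/blog/old-post", 0, 150),
    BrokenUrl("/services/seo-audit", 12, 900),
    BrokenUrl("/xyz%20garbled", 0, 1),
]
high, low = triage(broken)
print([b.url for b in high])
# -> ['/services/seo-audit', '/blog/old-post']
```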

I’ve Fixed the Error. How Do I Tell Google I’m Done?

You did it. You found the problem and put the fix in place. There’s just one last step: you have to tell Google to come and check your work.

Go back to the specific error report in the “Pages” section. At the very top, there’s a big, can’t-miss-it button that says “Validate Fix.”

Click that button.

This sends a notification to Google that says, “Hey, I think I’ve sorted out the issues with these URLs. Can you please come take another look?”

Google will then kick off a validation process. This can take anywhere from a few days to a couple of weeks. It will slowly re-crawl all the URLs from your error list. As it confirms each fix, you’ll see your list of pending URLs get smaller and smaller. Finally, you’ll get a wonderful email from Google confirming that the issues have been successfully fixed.

It’s one of the best emails an SEO can get.

Can I Prevent These Errors from Happening in the First Place?

Putting out fires is exhausting. It’s much better to fireproof the house. Being proactive instead of reactive will save you a ton of time and prevent traffic-killing problems down the road.

- Perform Monthly Site Audits: Once a month, crawl your own website with a tool like Screaming Frog. It acts like your own private Googlebot and will find broken links and redirect problems before Google even notices them.

- Create a “Page Deletion” Checklist: Make a simple, mandatory rule for your team. No one is allowed to delete a page or change a URL without following the checklist. And what’s step one on that checklist? “Set up a 301 redirect from the old URL.” This one habit will prevent most of your future 404s.

- Have a Weekly Coffee with Search Console: Don’t wait for a disaster to log in. I spend 10 minutes every Monday morning just looking at the “Pages” report for my clients. It lets me spot weird trends and fix tiny issues before they become huge headaches. If you want to go deeper, Google’s own official documentation on the Pages report is the best place to learn.
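
The deletion checklist is also easy to back up with a quick automated check. Assuming you can get a list of your site’s URLs before and after a change (from two crawls, say) plus your current redirect rules, this sketch lists every URL that disappeared without a redirect. All three inputs here are hypothetical.

```python
def missing_redirects(old_urls, new_urls, redirects):
    """Return URLs that existed before, are gone now, and have no redirect."""
    removed = set(old_urls) - set(new_urls)
    return sorted(url for url in removed if url not in redirects)


# Hypothetical before/after snapshots and redirect rules.
before = ["/", "/pricing", "/blog/launch", "/blog/old-news"]
after = ["/", "/plans", "/blog/launch"]
rules = {"/pricing": "/plans"}

print(missing_redirects(before, after, rules))
# -> ['/blog/old-news']
```

Anything it prints is a 404 waiting to happen.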

Your Errors Are Opportunities in Disguise

Let’s be honest, staring at a list of technical errors can feel like a drag. It can feel like you’ve done something wrong. I want you to completely flip that mindset.

Every single error is an opportunity for improvement.

A 404 is an opportunity to find a broken link and redirect that authority to a page that matters. A server error is an opportunity to make your site faster for everyone. A soft 404 is an opportunity to tidy up your site’s structure and help Google focus on your best content.

When you fix crawl errors, you’re not just cleaning up a mess. You are fundamentally strengthening your website’s foundation. You’re making it easier for search engines and for users. You are clearing the path so that your brilliant content, your amazing products, and your important message can be discovered by the people who need them most. So go on, open that “Pages” report. You’re not just fixing errors; you’re building a better, stronger website.

FAQ – How To Fix Crawl Errors In Search Console

What is a crawl error and why is it important to fix it?

A crawl error occurs when Google’s web-crawler, Googlebot, encounters a problem accessing a page on your site. Fixing crawl errors is crucial because they prevent Google from properly indexing your pages, which can reduce your visibility in search results and negatively impact your website’s traffic.

Where can I find crawl errors in Google Search Console?

You can find crawl errors in the ‘Pages’ report within Search Console, formerly known as the ‘Coverage’ report. This section lists all URLs with issues, including detailed reasons for the errors, which helps you identify and prioritize fixes.

What are the main types of crawl errors and their meanings?

The main crawl errors are: Server errors (5xx), where your server fails to handle the request; Redirect errors, caused by redirect chains that are too long, loop back on themselves, or end at a broken URL; ‘Submitted URL blocked by robots.txt,’ where a URL in your sitemap is disallowed in robots.txt; ‘Submitted URL marked ‘noindex’,’ where a URL in your sitemap carries a noindex tag; Soft 404s, where missing pages return a ‘200 OK’ status instead of a 404; and Not found (404) errors, where the page simply doesn’t exist.

How should I approach fixing different types of crawl errors?

To fix crawl errors, analyze affected URLs to understand their purpose, investigate root causes using tools like URL Inspection, and implement specific solutions such as resolving server issues, correcting redirect chains, updating robots.txt rules, removing or fixing noindex tags, or setting up proper redirects for 404s. Afterwards, use the ‘Validate Fix’ button to notify Google.

How can I prevent crawl errors before they happen?

Preventive measures include performing regular site audits with tools like Screaming Frog, establishing a protocol for site changes including setting up 301 redirects, and routinely checking Search Console reports to catch issues early. Using these strategies helps maintain website health and reduce unexpected crawl errors.