I’ll never forget my first massive e-commerce client. We’re talking millions of product variations, a sitemap that looked like a spiderweb, and a huge problem: their most profitable, newly-launched products just weren’t getting indexed. They were invisible to Google. Meanwhile, Googlebot was spending thousands of hits per day crawling expired promotion pages from 2017 and URL parameters for “size medium, color blue, on-sale” for a product that had been out of stock for months.

It was a nightmare.

That was my trial-by-fire introduction to the critical, and often overlooked, world of crawl budget. You can have the best content and the most powerful backlinks, but if Googlebot can’t—or won’t—find your important pages, you simply don’t exist. Learning how to optimize your crawl budget isn’t just a technical exercise; it’s the master key to unlocking your site’s true potential, especially for large, complex websites. This is the stuff that really separates the pros from the amateurs.


Key Takeaways

- Your Budget is Finite: Google doesn’t have unlimited time. It gives your site a set amount of resources based on its health (Crawl Rate) and how important it thinks you are (Crawl Demand).

- Don’t Obsess (If You’re Small): Got fewer than 10,000 pages? This probably isn’t your biggest fire. Go focus on content and links.

- Server Logs are the Truth: Google Search Console’s Crawl Stats report is a nice summary. Your server logs are the raw, unfiltered truth of what Googlebot is actually doing.

- Pruning ‘Zombie Pages’ Works: One of the fastest ways to fix your budget is to get rid of low-value, thin “zombie pages” that are eating up crawls.

- The Technical Goblins: URL parameters, messy navigation, redirect chains, and 5xx server errors are the biggest budget killers. For large sites, fixing them isn’t optional.

- You’re the Guide: A clean robots.txt, a tight XML sitemap, and smart internal linking are how you tell Google exactly where to go, and what to ignore.

So, What Exactly Is This “Crawl Budget” Everyone’s Talking About?

Think of it this way: crawl budget is just the number of pages Googlebot will crawl on your site on any given day. It’s not one single, fixed number. Instead, Google calculates it based on two core factors.

First, you’ve got the Crawl Rate Limit. This is purely technical. Googlebot tries to be a good web citizen; it doesn’t want to crash your server by crawling too aggressively. It self-adjusts based on your site’s health. If your server responds lightning-fast with 200 OK statuses, Google might crawl more. If your server starts throwing 5xx errors or slows to a crawl, Googlebot will slam the brakes.

Then, there’s Crawl Demand. This is all about value. Google is constantly asking: how popular and how fresh is this site? A site like The New York Times, which publishes hundreds of new, in-demand articles daily, has an incredibly high crawl demand. Google wants to find that new content instantly. A small business blog that hasn’t been updated in six months? It has very low crawl demand.

Your total crawl budget is simply the combination of these two things: what your server can handle (rate) and what Google thinks is worth finding (demand).

Does My Little Blog Really Need to Worry About Crawl Budget?

Honestly? Probably not.

This topic gets a ton of airtime, and I constantly see new site owners with 50 blog posts stressing about it. If your site is small—let’s say, under 10,000 pages—and you publish new content every so often, Google will almost certainly find and index your pages just fine. Your server can handle the load, and the demand is easily met.

You should spend your time creating amazing content and building high-quality links. That’s it.

So, who should be obsessed with this?

- Large E-commerce Sites: This is the big one. My client with the faceted navigation nightmare is the poster child for this. When filters for size, color, brand, and price create billions of potential URL combinations, you have a massive crawl budget problem.

- International Sites: If you’re using hreflang to manage multiple versions of your site for different countries and languages (e.g., /en-us/, /en-gb/, /fr-ca/), your page count multiplies. You need to ensure Google can find all these variations efficiently.

- News & Publisher Sites: When you publish dozens or hundreds of articles a day, you need to ensure they get crawled and indexed now, not next week.

- Sites with Lots of User-Generated Content: Think forums, social networks, or sites with millions of user profiles. These can quickly spiral out of control.

If you fit into one of these buckets, welcome to the club. Let’s get to work.

How Can I Find Out What Google Is Actually Doing on My Site?

You can’t fix a problem you can’t see. Before you touch a single line of code or robots.txt, you have to become a detective. Your first stop is easy, but your second stop is where the real gold is.

Is Google Search Console Lying to Me?

No, it’s not lying… but it is summarizing.

The “Crawl Stats” report in Google Search Console (under Settings) is your high-level dashboard. It’s fantastic. It gives you a 90-day history of Google’s activity, showing total crawl requests, total download size, and average response time.

More importantly, it breaks down crawls by:

- Response Code: Are you serving a ton of 404s (Not Found) or 5xx (Server Errors)? This is a red flag.

- File Type: Is Google wasting time crawling your CSS and JS files instead of your HTML?

- Purpose: Is Googlebot discovering new pages or refreshing known ones?

- Bot Type: Are you seeing hits from Googlebot Smartphone or Googlebot Desktop?

This report is your go-to health check. If you see a big spike in 5xx errors or a sudden drop in crawl requests, you know something is technically wrong.

Are You Brave Enough to Look at Your Server Logs?

This is it. This is the real secret weapon for understanding crawl budget. GSC gives you the summary; server logs give you the line-by-line, unvarnished truth.

Your web server (like Apache or Nginx) logs every single request it receives. This includes requests from users, spam bots, and, most importantly, Googlebot. Analyzing these logs is like watching a replay of exactly what Google did, minute by minute.

To do this, you (or your developer) need to get access to your raw server logs. Then, you can use a tool like Screaming Frog’s Log File Analyser to make sense of them.

When I crack open a log file, I’m hunting for:

- Real Googlebot Hits: What’s the total number of times Googlebot hit my site? How does it stack up against GSC?

- Most Crawled URLs: Is Google spending 80% of its time on my “About Us” page or my faceted navigation parameters?

- Uncrawled URLs: What important pages are missing from this log file? This tells me Google isn’t finding them.

- Status Codes: What response codes is Google actually receiving? I often find redirect chains (301s) and server errors (500s) that GSC missed.

- Crawl Frequency by Directory: Is Google correctly prioritizing my /blog/ and /products/ directories, or is it lost in a /user/profile/ black hole?

For my e-commerce client, the log files were the smoking gun. They showed, clear as day, that Googlebot was spending 90% of its crawl budget on URLs containing ?size= and ?color=. This data was the evidence I needed to get the development team to act.
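If you don’t have a log analysis tool handy, the same hunt can be roughed out in a few lines of Python. This is a minimal sketch, assuming your server writes the common "combined" log format; the function name, the sample lines, and the IP addresses are made up for illustration. It also trusts the user-agent string, which can be spoofed, so treat the numbers as a first pass rather than verified Googlebot traffic.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Combined Log Format (assumed):
# ip - - [time] "METHOD path HTTP/x" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def summarize_googlebot(lines):
    """Count Googlebot requests by status code, top-level directory, and query-string use."""
    by_status, by_section = Counter(), Counter()
    total = with_params = 0
    for line in lines:
        match = LOG_LINE.match(line)
        # User-agent check only; this sketch does not verify the requesting IP.
        if not match or "Googlebot" not in match["agent"]:
            continue
        total += 1
        parts = urlsplit(match["path"])
        by_status[match["status"]] += 1
        first_segment = parts.path.strip("/").split("/")[0]
        by_section["/" + first_segment + "/" if first_segment else "/"] += 1
        if parts.query:
            with_params += 1
    return {"total": total, "by_status": by_status,
            "by_section": by_section, "with_params": with_params}

# Made-up sample lines, purely for illustration.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes/?color=red&size=10 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /blog/post-1 HTTP/1.1" 301 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Oct/2023:13:56:01 +0000] "GET /products/boot HTTP/1.1" 200 7300 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

report = summarize_googlebot(sample_log)
share = report["with_params"] / report["total"]
print(f"Googlebot hits: {report['total']}, share on parameter URLs: {share:.0%}")
```

The share of hits landing on parameter URLs is the number I’d put in front of a development team first.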

How Do I Make Google Want to Visit My Site More Often?

Your goal here is to boost that “Crawl Demand” we talked about. You need to send strong signals to Google that your site is a high-value, active, and fresh resource.

Is Your Content Still Fresh, or Is It Gathering Dust?

Google prioritizes freshness. It’s constantly looking for new and updated information. If your site is static and hasn’t changed in a year, Google will learn that it doesn’t need to visit very often. Why would it?

You can increase crawl demand by:

- Publishing new content: This is the most obvious one. A regular publishing cadence trains Google to come back more often.

- Updating old content: This is my favorite. Go back to an old, high-performing article. Add new sections, update statistics, fix broken links, and add new images. When Google sees that the content has been substantially improved, it re-evaluates it and signals that your site is being actively maintained.

And I’m not talking about changing a comma and hitting “update.” This has to be a genuine, valuable improvement.

Are You Accidentally Hosting a “Zombie Page” Apocalypse?

On the flip side of freshness, you’ve got “zombie pages.” These are the low-value, thin, “dead” content cluttering up your site.

Think of pages like:

- Old, thin blog posts with no traffic.

- Tag or category pages with only one or two posts.

- Old user profiles that were never filled out.

- Archive pages for a specific day in 2015.

These pages are leeches. They provide no value to users, get no traffic, and attract no links. But Google still has to crawl them. They eat your crawl budget and dilute your site’s overall “quality” score.

The fix is a content audit. Identify these zombie pages (low traffic, no links, thin content) and then “prune” them. You have three options:

- Improve It: If the topic is good but the content is weak, rewrite and improve it.

- noindex It: If the page is necessary for users but not for search (like some archive pages), add a noindex tag. Google will still crawl it (which wastes some budget), but it won’t index it.

- Delete It (and 410 It): If the page is pure junk, delete it and have your server return a 410 Gone status. This is a clear signal to Google that the page is gone forever and it should be removed from the index and not crawled again. A 404 works too, but 410 is more permanent.

Pruning low-quality content is one of the most powerful ways to focus your crawl budget on the pages that actually matter.
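To make the audit repeatable, the three options can be written down as a simple decision rule. This is only a sketch: the PageStats fields, the thresholds, and the two yes/no flags are my own assumptions, and deciding whether a topic is worth saving is still a human call that you feed in.

```python
from dataclasses import dataclass

@dataclass
class PageStats:
    url: str
    yearly_visits: int
    backlinks: int
    word_count: int
    topic_is_valuable: bool  # editorial judgment, entered by a human
    needed_by_users: bool    # e.g. an archive page people still navigate to

def pruning_action(page, min_visits=10, min_words=300):
    """Return 'keep', 'improve', 'noindex', or 'delete_410' for one audited page.

    The thresholds are illustrative assumptions, not recommended values.
    """
    is_zombie = (page.yearly_visits < min_visits
                 and page.backlinks == 0
                 and page.word_count < min_words)
    if not is_zombie:
        return "keep"
    if page.topic_is_valuable:
        return "improve"
    if page.needed_by_users:
        return "noindex"
    return "delete_410"

audit = [
    PageStats("/blog/buying-guide", 4200, 12, 1800, True, True),
    PageStats("/blog/quick-note", 3, 0, 150, True, False),
    PageStats("/archive/2015/03/14/", 1, 0, 40, False, True),
    PageStats("/tag/misc/", 0, 0, 25, False, False),
]
for page in audit:
    print(page.url, "->", pruning_action(page))
```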

Is My Website’s “Front Door” Jammed Shut?

Now we shift to the technical side: the “Crawl Rate Limit.” Is your site fast, healthy, and easy for Googlebot to access? Or is it a slow, error-filled maze?

How Fast is Too Slow for Googlebot?

Users hate slow sites, and so does Googlebot. The key metric here isn’t just “page load time,” but your server response time, often called Time to First Byte (TTFB). This is how long it takes your server to even begin sending information after it gets a request.

If Googlebot hits your server and has to wait two seconds just to get a “hello” back, it’s going to slow down. It assumes your server is overloaded and will reduce its crawl rate to avoid breaking it.

A slow TTFB almost always points back to your hosting. Cheap, shared hosting might save you $10 a month, but it could be costing you thousands in lost indexing and rankings. A fast server with a good TTFB (under 300ms) gives Google the green light to crawl more aggressively.

Of course, your overall PageSpeed (LCP, CLS, etc.) matters for users and ranking, but for crawl budget, the server’s initial response is king.
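If you want a rough TTFB number without opening a browser, here is a minimal sketch using only Python’s standard library. The function name and defaults are my own. It opens the connection first, so DNS, TCP, and TLS setup are left out of the timing, and it stops the clock as soon as the response headers arrive. One request from one location is only a spot check, not a benchmark.

```python
import http.client
import time

def measure_ttfb(host, path="/", port=443, use_tls=True, timeout=10.0):
    """Return seconds between sending a GET request and receiving the response headers."""
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    try:
        conn.connect()  # connect up front so connection setup is not timed
        start = time.perf_counter()
        conn.request("GET", path, headers={"User-Agent": "ttfb-check-sketch"})
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed = time.perf_counter() - start
        response.read()  # drain the body so the connection closes cleanly
        return elapsed
    finally:
        conn.close()
```

Calling measure_ttfb("www.example.com") a handful of times and comparing the results against the 300ms mark gives you a quick sanity check on your hosting.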

Are You Serving Google a Bunch of Error Codes?

Every time Googlebot requests a page, it expects a 200 OK status. If it gets something else, it’s a signal.

5xx Server Errors (like 500 or 503): This is the emergency brake. A 5xx error means “your server is broken.” When Googlebot sees these, it will dramatically reduce its crawl rate, or even stop crawling altogether for a while. A high number of 5xx errors is the most critical crawl budget problem you can have. You must fix the underlying server issue immediately.

404 Not Found Errors: These aren’t as critical as 5xx errors, but they’re still a waste. Every time Google crawls a 404 page, it’s a request that could have been spent on a real, valuable page. Having some 404s is normal. Having thousands of them (especially if they’re linked internally) is a sign of a messy site, and it will slowly drain your budget.

How Do I Stop Google from Wasting Time in the “Junk Rooms” of My Site?

This is where you take the steering wheel. You need to put up signs that tell Googlebot, “Crawl here, don’t crawl there.”

Is Your robots.txt File Actually Doing Its Job?

Your robots.txt file, which lives in your site’s root directory (e.g., yoursite.com/robots.txt), is your bouncer. It’s the very first thing Googlebot checks before crawling any other page.

Its job is to tell bots which directories or URLs to Disallow (ignore). This is your number one tool for saving crawl budget.

What should you be blocking?

- Faceted Navigation Parameters: This is the big one for e-commerce. You want Google to crawl /shoes/ but not /shoes/?color=red&size=10. You’d add a line like: Disallow: /*?color=

- Internal Search Results: Never let Google index your site’s own search results. They are low-quality, often duplicate, and a potential black hole. Add: Disallow: /search/

- Admin, Login, and Cart Pages: Google doesn’t need to crawl your login page, user carts, or checkout process. Block them with one rule per line: Disallow: /wp-admin/, Disallow: /cart/, and Disallow: /my-account/

A clean robots.txt is the foundation of good crawl hygiene.
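Before shipping new rules, it helps to check which URLs they would actually catch. Here is a small hand-rolled sketch of wildcard matching, where * matches any run of characters and a trailing $ anchors the end of the URL. It is simplified on purpose: it only looks at Disallow patterns and ignores Allow rules and rule precedence, and the pattern list and test paths are my own examples. Notice that /*?color= only catches URLs where color is the first parameter, which is why the sketch adds /*&color= as well.

```python
import re

def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern ('*' wildcard, optional trailing '$') to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))

def is_disallowed(url_path, disallow_patterns):
    """True if any Disallow pattern matches from the start of the path (query string included)."""
    return any(pattern_to_regex(p).match(url_path) for p in disallow_patterns)

# Example rules (assumptions for this sketch).
DISALLOW = ["/*?color=", "/*&color=", "/search/", "/wp-admin/", "/cart/", "/my-account/"]

for path in ["/shoes/", "/shoes/?color=red&size=10", "/shoes/?size=10&color=red",
             "/search/?q=boots", "/blog/search-tips/"]:
    print(path, "->", "blocked" if is_disallowed(path, DISALLOW) else "crawlable")
```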

Are noindex and robots.txt the Same Thing? (A Costly Mistake)

This is a critical distinction that trips so many people up.

- Disallow in robots.txt: This tells Google, “Do not even look at this page.” Googlebot never requests the URL. This saves crawl budget.

- noindex Meta Tag: This tag is in the <head> of an HTML page. It tells Google, “You can look at this page, but don’t show it in your search results.”

Here’s the catch: for Google to see the noindex tag, it must first crawl the page.

This means noindex does not save you crawl budget.

Use robots.txt for sections you don’t want Google to ever see (like parameters). Use noindex for pages you want Google to see and understand (perhaps to pass link equity) but not to index (like a “thank you” page or a thin author archive).

Warning: Never, ever block a page in robots.txt and add a noindex tag to it. If the page is blocked, Google will never see the noindex tag, and the page might stay in the index (as just a URL) forever.
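This conflict is easy to detect automatically. The sketch below assumes you already have each page’s HTML and a flag saying whether robots.txt blocks it (for example from a crawler export); the class and function names are hypothetical. It only reads the robots meta tag, not the X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))

def has_noindex(html):
    """True if the page carries a robots meta tag that includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

def conflicting_pages(pages):
    """pages: iterable of (url, blocked_by_robots_txt, html). Return URLs with both signals."""
    return [url for url, blocked, html in pages if blocked and has_noindex(html)]

pages = [
    ("/thank-you/", False, '<head><meta name="robots" content="noindex, follow"></head>'),
    ("/old-promo/", True, '<head><meta name="ROBOTS" content="NOINDEX"></head>'),
    ("/cart/", True, "<head><title>Cart</title></head>"),
]
print(conflicting_pages(pages))
```

Any URL this returns needs one signal removed: unblock it so Google can read the noindex, or drop the tag and rely on the block.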

Are You Sending Googlebot on a Wild Goose Chase?

Your site’s architecture is the GPS for Googlebot. A clean, logical structure leads it directly to your treasures. A messy one sends it down dead-end roads and into endless loops.

How Many Clicks Does It Take to Get to Your Best Content?

This concept is called “link depth.” How many clicks from the homepage does it take to reach a specific page?

If your most important product page is 20 clicks deep, Google will assume it’s unimportant. Pages linked directly from your homepage, main navigation, or footer are seen as the most important and are crawled most frequently.

Your internal linking is your primary way of telling Google what’s important. Your key pages should be “shallow”—no more than 2-3 clicks from the homepage. If you have “orphaned pages” (pages with no internal links pointing to them), Google will almost certainly never find them.
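Link depth is straightforward to compute once you have your internal links as a graph. Here is a minimal sketch using a breadth-first search from the homepage. The link map is a made-up example, and in this sketch "orphaned" means unreachable by following links from the homepage, which is slightly broader than having no inbound links at all.

```python
from collections import deque

def click_depths(links, home="/"):
    """Breadth-first search over internal links; returns {url: clicks from the homepage}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphaned_pages(links, all_pages, home="/"):
    """Pages that exist but cannot be reached by following links from the homepage."""
    return sorted(set(all_pages) - set(click_depths(links, home)))

# Hypothetical site: each key links to the URLs in its list.
links = {
    "/": ["/shoes/", "/blog/"],
    "/shoes/": ["/shoes/trail-runner/"],
    "/blog/": ["/blog/fit-guide/"],
    "/blog/fit-guide/": ["/shoes/trail-runner/", "/shoes/clearance-boot/"],
}
all_pages = list(links) + ["/shoes/trail-runner/", "/shoes/clearance-boot/", "/shoes/new-launch/"]

depths = click_depths(links)
too_deep = [url for url, depth in depths.items() if depth > 2]
print("Deeper than 2 clicks:", too_deep)
print("Orphans:", orphaned_pages(links, all_pages))
```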

Are Your Redirects Sending Bots in a Never-Ending Loop?

Redirects (like a 301) are a normal part of the web. But “redirect chains” are a serious crawl budget killer.

A redirect chain is when:

- Page A -> 301 redirects to Page B

- Page B -> 301 redirects to Page C

- Page C -> 301 redirects to Page D

Googlebot will follow these, but it’s a massive waste of time and resources. After a few hops, it will just give up. Every internal link on your site should point directly to the final, 200 OK version of a page. Go audit your site and fix these chains.
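If you can export your redirects as a simple source-to-target map, finding chains and the links that need updating takes very little code. This is a sketch under that assumption. The function names and the max_hops cap are mine, and the cap is just an arbitrary safety limit for this example.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow a {source: target} redirect map; return (final_url, hops).

    Raises ValueError on a loop or when max_hops is exceeded.
    """
    seen = [url]
    while url in redirects:
        url = redirects[url]
        if url in seen:
            raise ValueError("redirect loop: " + " -> ".join(seen + [url]))
        seen.append(url)
        if len(seen) - 1 > max_hops:
            raise ValueError("too many hops starting at " + seen[0])
    return url, len(seen) - 1

def links_to_fix(internal_links, redirects):
    """Map each internally linked URL that redirects to its final destination."""
    fixes = {}
    for url in internal_links:
        final, hops = resolve_redirect(url, redirects)
        if hops > 0:
            fixes[url] = final
    return fixes

redirects = {"/page-a": "/page-b", "/page-b": "/page-c", "/page-c": "/page-d"}
print(resolve_redirect("/page-a", redirects))
print(links_to_fix(["/page-a", "/page-c", "/page-d"], redirects))
```

The second function gives you a find-and-replace list: every internal link on the left should be rewritten to point at the URL on the right.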

What’s the Real Deal with nofollow?

The nofollow link attribute (rel="nofollow") tells Google, “Don’t follow this specific link,” although Google now treats it as a hint rather than a strict command. It was originally designed for user-generated content and paid links, but it has a strategic internal use, too.

If you have links on every page that you really don’t want Google wasting time on (like a “login” or “register” link in your header), you can add a nofollow to them.

This “PageRank sculpting” is an old-school technique, and Google has said it’s not as effective as it used to be. However, it can still be a useful micro-optimization for telling Google, “This link is not important for crawling or ranking.” I use it sparingly, but it’s another tool in the toolbox.

Is Your Site Map a Treasure Map or a Labyrinth?

Your XML sitemap is supposed to be your clean, curated “map” that you hand directly to Google. It says, “Here are all the pages I want you to index.”

And yet, so many are a complete mess.

Why Is My Sitemap Full of Pages I Don’t Want Indexed?

This is, by far, the most common mistake I see. People use a plugin that automatically generates a sitemap and includes every single URL on the site. This means your sitemap is full of 404 pages, redirected pages, noindexed pages, and canonicalized pages.

This defeats the entire purpose! You’re handing Google a map full of junk.

Your sitemap should contain only your best, most valuable, indexable pages. It should only have:

- Pages that return a 200 OK status.

- Pages that are the canonical version.

- Pages that do not have a noindex tag.

- Pages that are not blocked by robots.txt.

A clean, curated sitemap is a powerful signal. A bloated, auto-generated one is just more noise.
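Those four checks translate directly into a filter you can run before the sitemap is written. A minimal sketch, assuming you already have crawl data for each URL; the PageRecord fields and the example URLs are hypothetical.

```python
from dataclasses import dataclass
import xml.etree.ElementTree as ET

@dataclass
class PageRecord:
    url: str
    status: int
    canonical: str
    noindex: bool
    blocked_by_robots: bool

def sitemap_worthy(page):
    """Apply the four checks: 200 status, self-canonical, no noindex, not blocked."""
    return (page.status == 200 and page.canonical == page.url
            and not page.noindex and not page.blocked_by_robots)

def build_sitemap(pages):
    """Return sitemap XML (as a string) containing only the pages that pass every check."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    for page in filter(sitemap_worthy, pages):
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")

crawl = [
    PageRecord("https://example.com/shoes/", 200, "https://example.com/shoes/", False, False),
    PageRecord("https://example.com/shoes/?size=10", 200, "https://example.com/shoes/", False, True),
    PageRecord("https://example.com/old-promo/", 404, "https://example.com/old-promo/", False, False),
    PageRecord("https://example.com/thank-you/", 200, "https://example.com/thank-you/", True, False),
]
print(build_sitemap(crawl))
```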

Does Google Even Care About <lastmod>?

The <lastmod> tag in a sitemap tells Google the date a page was last modified.

Does Google trust it? Only if you use it honestly. If you update your <lastmod> date for every page on your site every single day without actually changing the content, Google will learn to ignore it.

But if you use it correctly—only updating the date when the content has actually been significantly changed—it can be a great signal to encourage Google to re-crawl that specific page. It helps Google prioritize its “refresh” crawls.
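One way to keep the date honest is to tie it to the content itself instead of to the publish button. The sketch below only moves the date when a hash of the content changes. The function name and the in-memory store are my own assumptions, and a hash flags any edit, even a changed comma, so in practice you would hash just the main body text or add your own test for what counts as significant.

```python
import hashlib
from datetime import date

def update_lastmod(url, content, store, today=None):
    """Return the lastmod date for a page, changing it only when the content hash changes.

    store maps url -> (content_hash, lastmod_iso_date) and is updated in place.
    """
    today = today or date.today().isoformat()
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    previous = store.get(url)
    if previous and previous[0] == digest:
        return previous[1]  # unchanged content keeps its old date
    store[url] = (digest, today)
    return today

store = {}
first = update_lastmod("/guide/", "Version one of the guide.", store, today="2024-01-10")
same = update_lastmod("/guide/", "Version one of the guide.", store, today="2024-02-01")
changed = update_lastmod("/guide/", "Version two, with a new section.", store, today="2024-03-05")
print(first, same, changed)
```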

What’s the Secret Sauce for E-commerce and Faceted Navigation?

This deserves its own section because it is, without a doubt, the #1 killer of crawl budget on the web.

Are URL Parameters Eating Your Lunch?

Faceted navigation (letting users filter by size, color, brand, etc.) is great for users. It’s a disaster for bots.

A user can land on your “Shoes” category and create URLs like:

- .../shoes/?size=10

- .../shoes/?brand=nike

- .../shoes/?size=10&brand=nike

- .../shoes/?brand=nike&size=10 (This is often a separate, duplicate page!)

This, my friend, is a “parameter black hole.” You’ve just created a near-infinite number of low-value, duplicate-content pages for Google to crawl. This was the exact problem my client had.
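The arithmetic behind the black hole is worth seeing once. In this sketch, the facet counts are invented numbers for a single category page. Each facet is either unset or set to one value, so the number of distinct selections is the product of (options + 1) across the facets, and that is before parameter order doubles things up again. The second function shows how sorting parameters collapses the reordered duplicates.

```python
from math import prod
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def facet_url_count(options_per_facet):
    """Distinct filter selections when each facet is either unset or set to one value."""
    return prod(n + 1 for n in options_per_facet.values())

def normalize_param_order(url):
    """Sort query parameters so that reordered duplicates collapse to one URL."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))

# Invented facet sizes for one category page.
facets = {"size": 12, "color": 10, "brand": 40, "price_band": 6}
print("Selections for one category:", facet_url_count(facets))

a = normalize_param_order("https://example.com/shoes/?size=10&brand=nike")
b = normalize_param_order("https://example.com/shoes/?brand=nike&size=10")
print(a == b, a)
```

Multiply that per-category figure by every category on the site and you can see how the URL count gets out of hand so quickly.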

How Can I Let Users Filter Without Confusing Google?

You have to attack this with a multi-layered solution.

- Block in robots.txt: For 99% of sites, the best solution is to Disallow all parameters you don’t want crawled. For example, a rule like Disallow: /*?size= saves crawl budget instantly.

- Use the Canonical Tag: On filtered pages you leave crawlable (like .../shoes/?size=10), the HTML should have a rel="canonical" tag in the <head> that points back to the main category page: <link rel="canonical" href="https://.../shoes/">. This tells Google, “I know this page looks duplicate. Please consolidate all ranking signals to the main /shoes/ page.” Keep in mind that Google can only read this tag on URLs it is allowed to crawl.

- Don’t Count on GSC’s (Old) URL Parameters Tool: This tool used to let you tell Google how to handle specific parameters (e.g., “ignore size”), but Google retired it in 2022, so it is no longer an option.

- Google’s Official Advice: For a really deep, technical dive, you should read Google’s own guide on faceted navigation. They outline advanced techniques like using AJAX to load content without creating new URLs.

For my client, the solution was a combination of #1 and #2. We aggressively blocked all parameter combinations in robots.txt and made sure the canonical tags were perfect. Within three weeks, their crawl stats completely changed. Googlebot stopped crawling the junk, discovered their new product lines, and sales followed shortly after.
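Getting the canonical tags "perfect" mostly means generating them from one rule instead of by hand. Here is a minimal sketch of such a rule, not the code we used for the client: the FACET_PARAMS set is a hypothetical list of filter parameters, and anything not on that list (pagination, for instance) is left in the URL.

```python
import html
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

FACET_PARAMS = {"size", "color", "brand"}  # hypothetical list of filter parameters

def canonical_url(url, facet_params=FACET_PARAMS):
    """Drop facet parameters (and any fragment) to get the URL the canonical tag should name."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in facet_params]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(url):
    """Build the <link rel="canonical"> element for a page URL."""
    return '<link rel="canonical" href="{}">'.format(html.escape(canonical_url(url), quote=True))

print(canonical_tag("https://example.com/shoes/?size=10&brand=nike"))
print(canonical_url("https://example.com/shoes/?color=red&page=2"))
```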

My Site is Huge. What Are the 80/20 Wins?

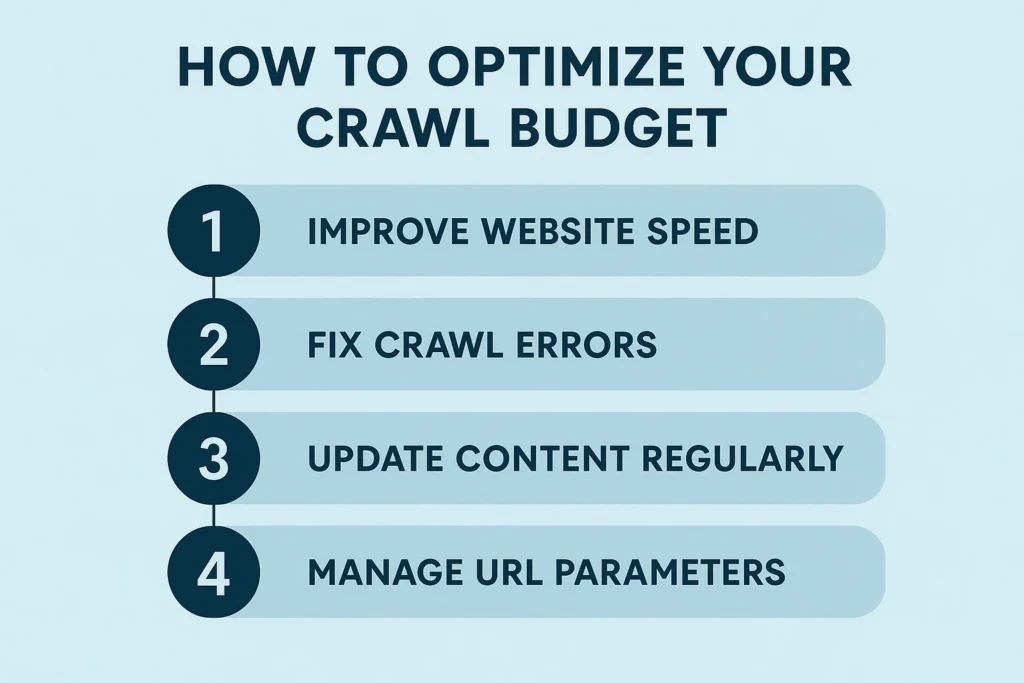

Okay, 3,500 words is a lot. If you’re feeling overwhelmed and just want the high-impact checklist, here it is. This is your 80/20 for crawl budget optimization.

- Fix All 5xx Server Errors. This is non-negotiable. Your server must be stable.

- Aggressively Block URL Parameters in your robots.txt file. This is the #1 win for e-commerce.

- Audit and Fix Redirect Chains. Find them, and update all internal links to point to the final 200 OK destination.

- Prune Your “Zombie Pages.” Do a content audit. Find low-value, no-traffic pages and either improve them or noindex/delete (410) them.

- Create a Clean, Curated XML Sitemap. Your sitemap is your VIP list, not your phone book. Only include 200 OK, indexable, canonical pages.

What’s the One Thing You Must Remember?

Crawl budget optimization isn’t a one-time “set it and forget it” task. It’s an ongoing process of technical gardening.

Your job is to make it as easy as possible for Googlebot to find your best content and as difficult as possible for it to get lost in your junk.

You have to actively prune the weeds (low-value content, parameters), pave clear pathways (internal linking), and post clear signs (robots.txt, sitemaps). It’s a constant, active process of guiding and refining.

Master this, and you’re no longer just hoping Google finds your content. You’re telling it where to look.

FAQ

What is crawl budget and why is it important for my website?

Crawl budget is the number of pages Googlebot will crawl on your site within a given timeframe, determined by your site’s health and importance. Proper management of this budget ensures Google can find and index your most valuable pages, which is vital for large or complex websites to maximize visibility.

How can I find out what Google is doing on my website?

You can use Google Search Console’s Crawl Stats report for an overview of Google’s activity, and analyze your server logs for detailed, raw data on Googlebot’s requests, including which URLs are crawled, response codes, and crawl frequency.

What steps can I take to increase Google’s interest in my website?

Boost Google’s crawl demand by regularly publishing or updating content to signal freshness, fixing technical issues like slow server responses and errors, improving internal linking, and removing low-value or duplicate pages that waste crawl resources.

How do I prevent Google from wasting crawl budget on unnecessary pages?

Use your robots.txt file to block access to irrelevant or low-value sections such as admin pages, search results, or parameter-heavy URLs. Also, implement canonical tags on duplicate pages and consider using noindex tags on thin or outdated content to keep it out of the index. Remember that noindex pages still get crawled, so only blocking them in robots.txt or removing them actually saves crawl budget.

What are the key technical SEO actions for optimizing crawl budget on large sites?

Key actions include fixing all server errors, blocking URL parameters in robots.txt, fixing redirect chains, pruning low-value pages, creating a curated sitemap with only important pages, and ensuring a clean site structure with shallow internal links to important content.